Hi there - I’m Jason, a member of the team making Wayward Strand.

It’s been a little while since our announcement on IndieDB, and we’re excited to share a bit more about Wayward Strand and its development process, especially now that we are getting closer to finishing and releasing it. As we’ve been developing Wayward Strand for a few years now, this post is a mix of what we’re focusing on right now, and catching you up to where our team is at with our tech and development process.

By the way, if you’ve never heard of Wayward Strand, that announcement is a great place to start!

So, one of the challenges we had to overcome when deciding to make a fully-3D real-time narrative game with complex, animated characters as an indie team was: ...how?

This is something that’s been a conundrum for indie developers of narrative games for a while now, with some opting to forgo visualising characters altogether. For instance, Fullbright, the creators of Gone Home and Tacoma, are only just including fully visualised characters in their upcoming Open Roads; in their previous games, they cleverly designed around the need for fully visualised characters to tell their stories.

While our answer to this was partially ‘let’s just do it and see how we go’, and we’ve run into plenty of trouble along the way, we have found what I think is a pretty good combination of existing / open-source tools, which has enabled us to create a game of the size and scope that we had been dreaming of.

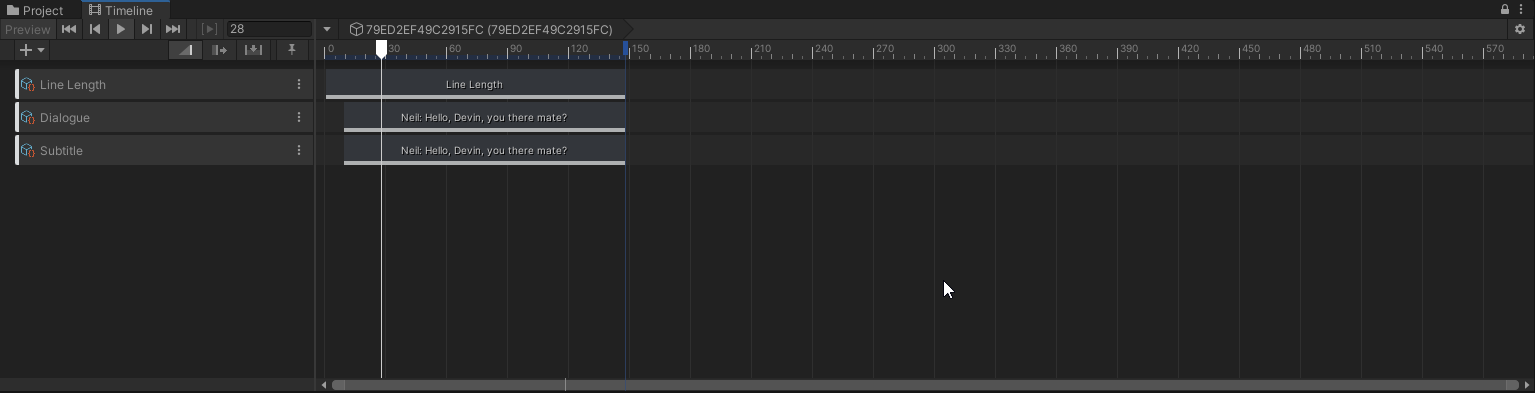

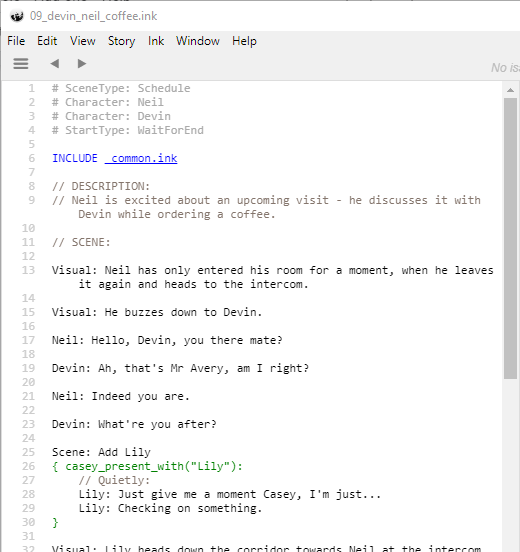

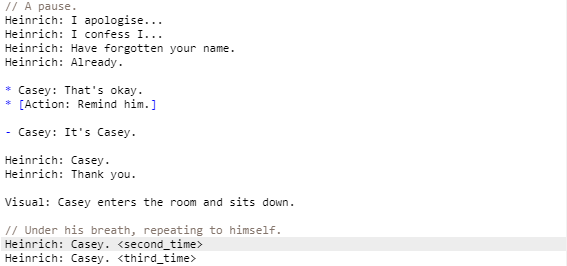

The first of these is ink - Inkle’s scripting language, which they’ve very generously provided as an open-source tool for developers. Ink fulfills a similar role to narrative tools such as Twine or Yarn Spinner - it allows you to construct complex interactive narratives in a way where you can easily plug it into a game engine. Astrologaster writer Katharine Neil has a great write-up of how to integrate ink, as well as Twine and Yarn Spinner, with Unity.

One of our scene files, open in the Inky editor.

There are many reasons we love ink, but one in particular is the narrative momentum that it encourages through its design. Writing with ink feels less like designing a circuit board and more like writing a script for theatre or the screen - while it can handle any level of complexity you need, your initial focus is the blank page and the written word, and you can add interactive complexity as you go.

Incidentally, Inkle have just released Overboard! - a murder mystery game that uses ink as the backbone for its dynamic narrative - check it out! And if you use ink or think it’s a cool tool, definitely consider supporting them on Patreon.

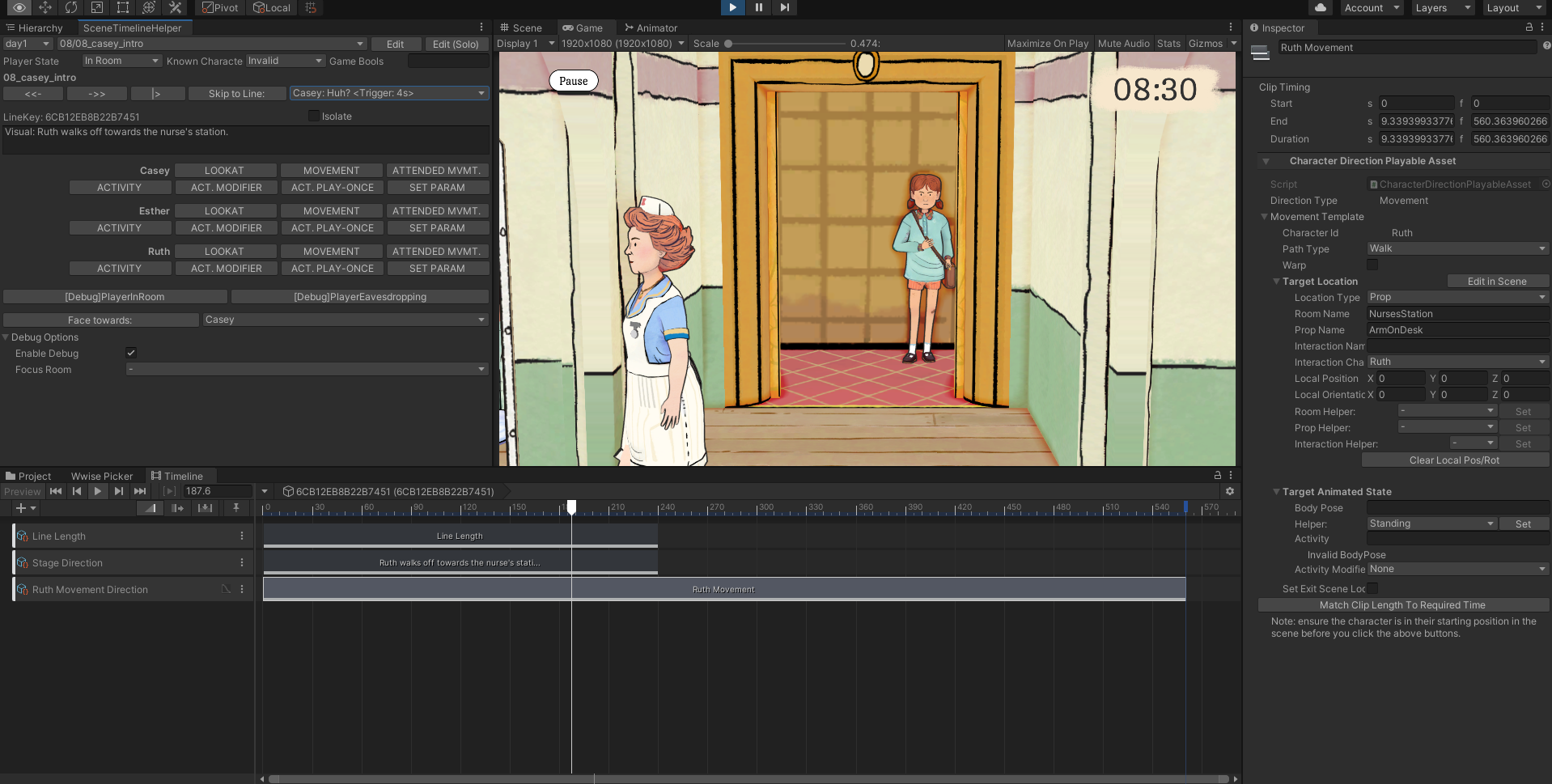

We are using ink in tandem with Unity’s Timeline tool.

Timelines are a feature of Unity that allow you to synchronise clips that they call “playables” to a time axis - it’s basically a programmable sequencer for events that can happen in your game, like animations playing, characters moving around, sounds triggering, etc. If you’d like to learn more about Timeline and how to use it, this post by Robert Yang is a great place to start.

So, how have we plugged these two different tools together for Wayward Strand?

Early on, we decided that the ink Line would be the key building block of the game. This would limit us in terms of ink’s capability to dynamically build lines based on logic, but this is a limitation we would have to take on anyway to include voice-over, and also to make localisation easier - including support for each of these was a goal for the game from an early stage.

From there, we planned out our integration with Unity. We wanted the ability to ‘direct’ our interactive scenes - to be able to modify any part of them, including being able to quickly and easily direct the timing of any aspect of a scene.

We also needed a way to trigger animations, character look-at changes, camera movements, and other minor Unity details - and while we could have run all of that stuff from ink, we were keen to draw a line between the writing process and the ‘scene direction’ process, which would allow us to focus on each of these processes in turn.

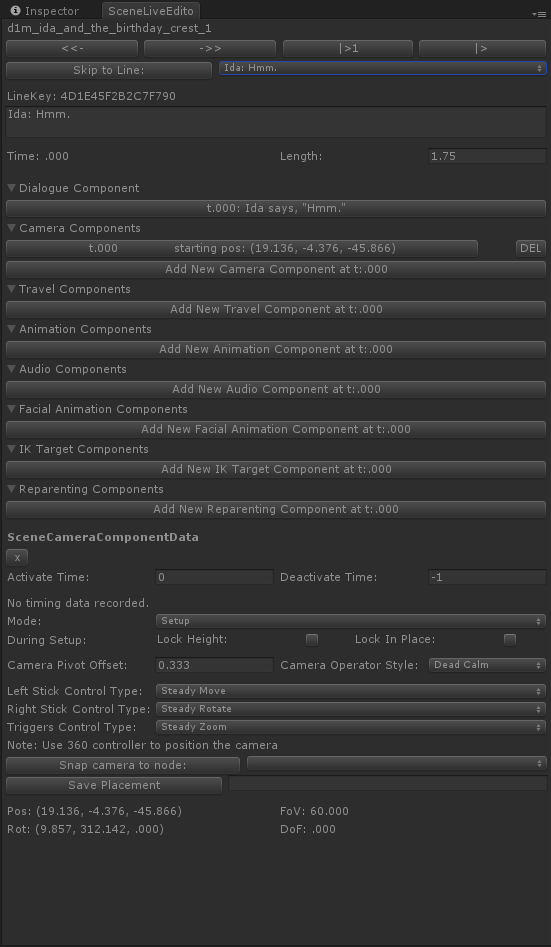

Our old line-editing tool, including a few features that we’ve cut to reduce scope.

Our initial integration actually happened before Timeline was released - we developed a data structure on the Unity side which stored data per ink Line, in arrays of “components” (so, each line could have a Dialogue component, multiple Movement components, and so forth). This component data was automatically created for each line as ink files were compiled, in a dictionary linked to the ink file.
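A minimal sketch of that per-line structure, written in Python for brevity rather than as actual Unity code. The class names, field names, line keys and sample dialogue are all hypothetical; only the overall shape (a dictionary keyed by line, holding arrays of components per line) comes from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueComponent:
    # Hypothetical fields: who speaks, and what they say.
    speaker: str
    text: str


@dataclass
class MovementComponent:
    # Hypothetical fields: who moves, and to which named spot.
    character: str
    destination: str


@dataclass
class LineData:
    # Each ink line owns one list ("array") per kind of component.
    dialogue: list = field(default_factory=list)
    movement: list = field(default_factory=list)


def build_scene_data(lines):
    """Create default component data for every (line_key, speaker, text) tuple."""
    scene = {}
    for line_key, speaker, text in lines:
        data = LineData()
        # Every line starts with a single Dialogue component.
        data.dialogue.append(DialogueComponent(speaker, text))
        scene[line_key] = data
    return scene


scene = build_scene_data([
    ("line_001", "Casey", "Hello?"),
    ("line_002", "Nurse", "Come in."),
])
# Further components are added per line during scene direction.
scene["line_002"].movement.append(MovementComponent("Nurse", "doorway"))
```

The point of the shape is that the dialogue data can be generated automatically, while movement and other components get layered on by hand afterwards.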

When Timeline was released, we quickly switched over, starting with a similar solution. We realised we could (with a bit of hackery, at the time) automatically generate Timeline assets, and we started by creating them for each ink Line, as we were doing before. While this is a fairly brute-force solution, and working with the scenes in Unity line-by-line can sometimes be counter-intuitive, it has lasted us over the course of the project.

So, our current pipeline is as follows:

1. We’ve created a custom version of the ink compiler, which creates a unique line identifier based on the text in the line, the current knot/stitch, and a unique identifier for the ink file that the line is in. It then prefixes this unique line identifier to the line itself.

A fun little issue with generating the unique line identifier is that if any line is exactly the same as another in a particular scene or knot, the two will be generated with the same id, so we have to add a “differentiator” on to the end of each - a little tag that denotes which of the two lines it is.
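To make the collision concrete, here is a rough Python sketch of the idea. It is not the modified ink compiler: the hashing scheme, the id length, the `_2` style suffix and the `id: text` prefix format are all assumptions chosen for illustration.

```python
import hashlib
from collections import defaultdict


def base_id(file_id, knot_path, text):
    """Hash the file id, knot/stitch path and line text into a short id."""
    payload = "\x1f".join([file_id, knot_path, text]).encode("utf-8")
    return hashlib.sha1(payload).hexdigest()[:10]


def assign_line_ids(file_id, lines):
    """lines: list of (knot_path, text) in script order. Returns one id per line."""
    seen = defaultdict(int)
    ids = []
    for knot_path, text in lines:
        stem = base_id(file_id, knot_path, text)
        seen[stem] += 1
        # First occurrence keeps the bare id; repeats get a differentiator suffix.
        ids.append(stem if seen[stem] == 1 else f"{stem}_{seen[stem]}")
    return ids


lines = [
    ("morning.greeting", "Hello?"),
    ("morning.greeting", "Hello?"),  # identical text in the same knot
    ("evening.greeting", "Hello?"),  # same text, different knot
]
ids = assign_line_ids("scene_01", lines)
# Prefix each id to its line, in a made-up "id: text" format.
prefixed = [f"{line_id}: {text}" for line_id, (_, text) in zip(ids, lines)]
```

In this sketch the two identical lines in the same knot share a hash, so the second one picks up the suffix, while the same text in a different knot hashes differently on its own. Because the id is derived from the text, editing a line in this sketch would also change its id.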

Another scene from the game.

Our modified compiler also generates a custom json file, alongside the ink json file, which includes a list of all the ink lines, so that we can easily process them on the Unity side. (You can see all our semi-hacky modifications to the ink compiler here.)

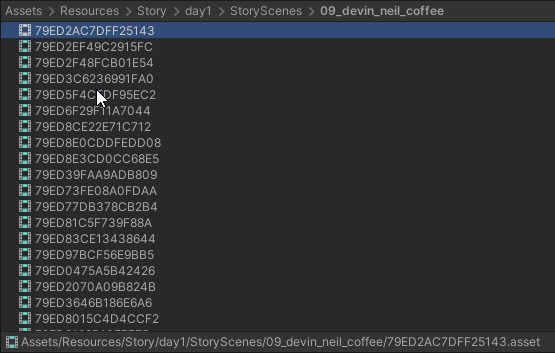

2. In Unity, we parse the ink Story to collect tag information about the scene, or knots inside the scene, and then we use the ink Line list from the custom json file to generate a Timeline asset for each line.

Generated Timeline assets for the scene in the Unity project view.

These Timeline assets get set up with a few default tracks and clips:

- a LineLength track/clip, which determines how long until the next line in the scene should be triggered (the length of time that a line lasts for is initially set to a value that’s calculated from the number of characters in the line);

- a Dialogue track/clip, which cues our VO when the clip is triggered, as well as handling our character mouth shapes and talking-animation-related behaviour (including head-turning);

- a Subtitle track/clip, which is used to trigger our speech bubbles, and also potential subtitles when that accessibility option is activated (these clips process the Line through a Dialogue system that handles the potential for translation).
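As a rough illustration of those defaults, the sketch below builds the three clips for a line and derives the initial length from its character count. It is plain Python rather than Timeline code, and the seconds-per-character rate, the minimum length and the `Clip` type are assumptions rather than the values or types used in the game.

```python
from dataclasses import dataclass

SECONDS_PER_CHARACTER = 0.06  # assumed reading/speaking rate
MINIMUM_LINE_SECONDS = 1.0    # assumed floor so very short lines still register


@dataclass
class Clip:
    track: str
    start: float
    duration: float


def default_line_length(text):
    """Initial line length in seconds, derived from the character count."""
    return max(MINIMUM_LINE_SECONDS, len(text) * SECONDS_PER_CHARACTER)


def default_clips(text):
    """One clip on each default track, all starting at 0 with the same length."""
    length = default_line_length(text)
    return [Clip(track, 0.0, length) for track in ("LineLength", "Dialogue", "Subtitle")]


clips = default_clips("I wasn't expecting visitors today.")
```

These are only starting values: the lengths are meant to be adjusted by hand later, during scene direction.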

3. Finally, we have a scene direction tool that exists alongside the Timeline, which allows us to easily move between lines in the scene (backward, forward, or jumping to a particular line), as well as displaying a bunch of helpful buttons that quickly add additional clips to the timeline - animation state change clips, movement clips, character head look-at clips, and so forth.

What this fundamentally means is that, when we go from our ink script, across to Unity, the majority of the data is already set up for us. Characters may not move in the scene until a scene direction pass happens, but they’ll talk with each other, Casey’s options will pop up on screen when they’re meant to, and the scene can be played from start to finish.

It also means that, when doing the scene directing pass, most of the data already exists, ready to be tweaked. We can quickly drag the LineLength clips around to get some more interesting behaviour occurring (like characters speaking over the top of one another, or a weighty pause before a response), and the only data we need to ‘create’ (other than movement data) is generally the finer-detail scene direction.
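The timing effect can be sketched with a little arithmetic. In this simplified Python model (an assumption about how the pieces fit together, not a description of the actual Timeline setup), each line starts when the previous line’s LineLength ends, regardless of whether that line’s dialogue has finished. The line names and durations are made up.

```python
def schedule(lines):
    """lines: list of (name, line_length, dialogue_length), all in seconds.

    Returns (name, start, dialogue_end) tuples. The next line is triggered
    when the current LineLength runs out, not when the dialogue finishes.
    """
    start = 0.0
    timings = []
    for name, line_length, dialogue_length in lines:
        timings.append((name, start, start + dialogue_length))
        start += line_length
    return timings


timings = schedule([
    ("question", 2.0, 3.0),  # LineLength shorter than the dialogue: next line overlaps
    ("reply", 4.5, 3.0),     # LineLength longer than the dialogue: a pause follows
    ("answer", 2.0, 2.0),
])
```

Here the reply begins one second before the question’s dialogue ends, and the final answer waits a second and a half after the reply has finished.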

There are some other fun details that I haven’t covered here - like how we respond to edits in our ink scenes after they’ve been imported once, or how we link our Playable clips to particular characters without using Timeline’s ‘binding’ functionality. Even though we’ve clearly put a lot of effort in to make this all work, it still feels like a significant amount of the heavy lifting is being done by Timeline and ink - we certainly couldn’t have done all of this while also building our own scripting language and scriptable sequencer.

This pipeline has been years in the making for our small team, and describing it has made me extra proud of all the work we’ve put in. We look forward to sharing more over the next few months!