Introduction

I have created a “word with labels” system that lets the kids learn individual words instead of only patterns. The kids are now also able to respond!

Overfitting models

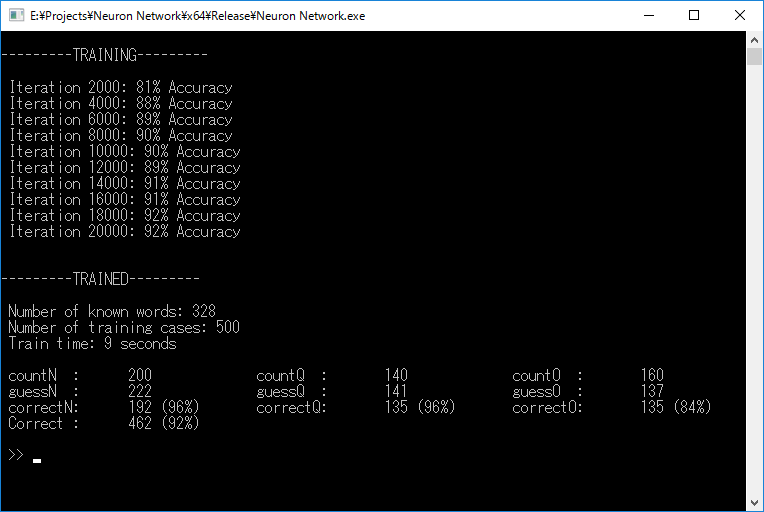

In the last article, my neural network model seemed to underfit the training data set, with an accuracy of only 77%-79%. It turned out that the model was alright. To improve the algorithm’s performance, all I needed was to change Visual Studio’s solution configuration from Debug x86 to Release x64 and then run 20000 iterations of Gradient Descent. Last time, it took the program almost 100 seconds to run 600 iterations; now it takes roughly 10 seconds to run 20000 iterations, with over 90% accuracy.

Passing 90% is always a good thing, but not necessarily good enough. It could be very annoying for the player if the AIs “accidentally” fail to recognize simple patterns like “Good morning” or “Are you hungry?”. So I want to get as close to 100% accuracy as possible. I will greatly increase the ratio of known words to training cases, and hopefully the algorithm will overfit to the known words. Then all we need is to feed it more words and training data. The approach is not that hard to implement because, for a single word, we can automatically create many different types of sentences that contain that word. It also works because we should not expect the kids to understand sentences that contain too many words unknown to them, while they are expected to understand sentences that they have learned before. In short, we’re heading for overfitting models with a lot of data for our neural networks. For the same reason, I’ve added another hidden layer, changing the neural network’s size from 15-10-6 to 15-12-12-6 to help it fit our training set better.
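To make the idea of generating many sentences from a single word more concrete, here is a minimal sketch. It assumes sentence templates with a `{w}` placeholder; the function name `generateSentences`, the placeholder syntax and the example templates are all hypothetical and are not taken from the game’s code.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: fills every "{w}" placeholder in each template with
// the given word and returns one generated sentence per template.
std::vector<std::string> generateSentences(const std::string& word,
                                           const std::vector<std::string>& templates) {
    const std::string placeholder = "{w}";
    std::vector<std::string> sentences;
    sentences.reserve(templates.size());
    for (const std::string& t : templates) {
        std::string sentence = t;
        std::size_t pos = 0;
        while ((pos = sentence.find(placeholder, pos)) != std::string::npos) {
            sentence.replace(pos, placeholder.size(), word);
            pos += word.size();  // continue searching after the inserted word
        }
        sentences.push_back(sentence);
    }
    return sentences;
}
```

For example, calling `generateSentences("fish", {"Do you like {w}?", "I want {w}."})` yields “Do you like fish?” and “I want fish.”. A real training set would presumably also need the sentence type stored alongside each template.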

A “Word with Labels” System

To help the kids interpret words and sentences better, I’ve built a system which marks a word with one or more labels.

For example, the word “you” is labeled “listener”, “good” is labeled “adj”, “fish” is labeled “animal” and “food/drink”, etc.

This already helps the kids find the subject and object of simple questions like “What is your name?” and should be very helpful in the future.

Wait? If we label words, then shouldn’t a parsing system work too? Many games have succeeded using this approach, right?

We will have to stick to Neural Networks and other Machine Learning techniques to simulate the learning process of the kids. These techniques also allow the kids to learn many different kinds of complex sentences and expressions. At the end of the day, both approaches should work if done correctly, but I still prefer Machine Learning because it’s simply fun and interesting as hell.

For now, we have 14 labels, which can cover many words already.

    enum EnumWordLabel {
        // Can be adjective (good, beautiful,...)
        word_label_adj = 0,
        // Can be verb (help, take,...)
        word_label_verb = 1,
        // Can be noun (name, age, color,...)
        word_label_noun = 2,
        // Can be place (room, house, mountainside,...)
        word_label_place = 3,
        // Can be time (morning, afternoon, o'clock,...)
        word_label_time = 4,
        // Can be tool (pen, gun, sword,...)
        word_label_tool = 5,
        // Can be object (table, machine,...)
        word_label_object = 6,
        // Can be human (miner, worker,...)
        word_label_human = 7,
        // Can be animal (cat, dog,...)
        word_label_animal = 8,
        // Can be plant (tree, flower,...)
        word_label_plant = 9,
        // Can be food or drink (cookies, water,...)
        word_label_food_drink = 10,
        // Can refer to the listener
        word_label_listener = 11,
        // Can refer to the speaker
        word_label_speaker = 12,
        // Can be other (what, who,...)
        word_label_other = 13,
    };

Since the kids don’t know English at the beginning and only gradually learn it, we still have plenty of time to gather words and label them. And since we split the game into smaller games and chapters, we can keep working on the word database after releasing the first few.
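Because a word can carry more than one label (“fish” is both “animal” and “food/drink”), one possible way to store the labels is a bitmask with one bit per label. The sketch below is only an assumption about how this could be done: `LabelMask`, `labelBit`, `hasLabel` and `makeExampleLabels` are hypothetical names, and only a subset of the enum is repeated so that the example compiles on its own.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>

// Subset of the article's EnumWordLabel, repeated so the sketch compiles alone.
enum EnumWordLabel {
    word_label_adj = 0,
    word_label_animal = 8,
    word_label_food_drink = 10,
    word_label_listener = 11,
};

// Hypothetical storage: one bit per label (14 labels fit in 16 bits).
using LabelMask = std::uint16_t;

constexpr LabelMask labelBit(EnumWordLabel label) {
    return static_cast<LabelMask>(1u << label);
}

bool hasLabel(LabelMask mask, EnumWordLabel label) {
    return (mask & labelBit(label)) != 0;
}

// Hypothetical word database keyed by the word itself.
std::unordered_map<std::string, LabelMask> makeExampleLabels() {
    return {
        {"you", labelBit(word_label_listener)},
        {"good", labelBit(word_label_adj)},
        {"fish", static_cast<LabelMask>(labelBit(word_label_animal) |
                                        labelBit(word_label_food_drink))},
    };
}
```

With this layout, `hasLabel(labels.at("fish"), word_label_animal)` and `hasLabel(labels.at("fish"), word_label_food_drink)` are both true, and adding a label to a word later is a single bitwise OR.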

More Sentence Types

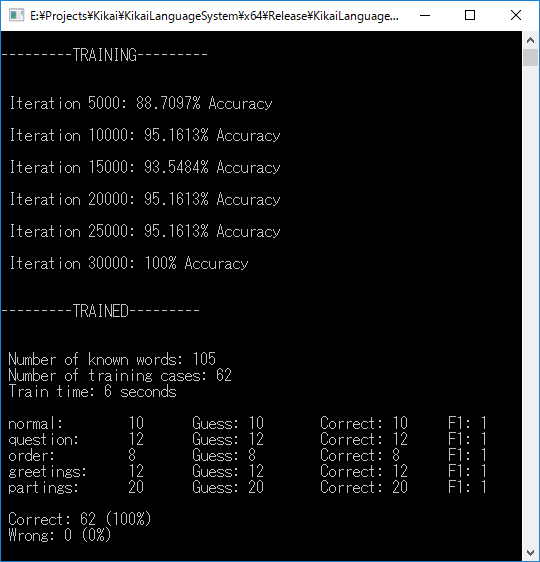

We have added two more types of sentences: greetings and partings.

“Hello”, “Hey there”, “Good morning”, etc. are marked as greetings, and “Bye bye”, “See you later”, “Goodbye”, etc. are marked as partings.

The neural net has been working well so far:

Do not worry if the ratio of known words to training cases looks too high; most known words are not yet used in the training cases. You can also see here that I now use the F1 score to judge the performance of the algorithm, instead of only recall as before.

Coming up with Responses

The kids are now able to respond, but only to simple sentences that they can easily interpret. They are not yet aware of the context of the conversation either. I will cover this topic in more depth in later articles, once the system has improved considerably.

Autocorrect the Input Sentence

I’m using Dynamic Programming (Minimum Edit Distance) to autocorrect words from the input sentence the player types in.

I’m currently running through all of the known words and trying to match each input word with the known word most similar to it in length and characters. For example, it will try to match “wat” with “what” or “liek” with “like”, but not “watttttttttttttttt” with “what” or “tone” with “come”. The algorithm is very beautiful and has great performance after adding a few if-else checks here and there. It is potentially slow once the number of known words becomes very big, though. Any suggestions here are much appreciated!
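As an illustration of the matching described above, here is a minimal sketch. It is not the game’s actual code. It assumes the optimal string alignment variant of edit distance, in which swapping two adjacent characters counts as one edit, and a maximum distance of 1. With those assumptions “liek” matches “like” while “tone” does not match “come”. The names `editDistance`, `autocorrect` and `maxDistance` are hypothetical.

```cpp
#include <algorithm>
#include <cstdlib>
#include <string>
#include <vector>

// Edit distance (optimal string alignment variant): insertions, deletions,
// substitutions and swaps of two adjacent characters each cost 1.
int editDistance(const std::string& a, const std::string& b) {
    const std::size_t n = a.size(), m = b.size();
    std::vector<std::vector<int>> d(n + 1, std::vector<int>(m + 1, 0));
    for (std::size_t i = 0; i <= n; ++i) d[i][0] = static_cast<int>(i);
    for (std::size_t j = 0; j <= m; ++j) d[0][j] = static_cast<int>(j);
    for (std::size_t i = 1; i <= n; ++i) {
        for (std::size_t j = 1; j <= m; ++j) {
            const int cost = (a[i - 1] == b[j - 1]) ? 0 : 1;
            d[i][j] = std::min({d[i - 1][j] + 1,           // deletion
                                d[i][j - 1] + 1,           // insertion
                                d[i - 1][j - 1] + cost});  // substitution or match
            if (i > 1 && j > 1 && a[i - 1] == b[j - 2] && a[i - 2] == b[j - 1]) {
                d[i][j] = std::min(d[i][j], d[i - 2][j - 2] + 1);  // adjacent swap
            }
        }
    }
    return d[n][m];
}

// Returns the closest known word within maxDistance edits, or the input
// unchanged when no known word is close enough.
std::string autocorrect(const std::string& input,
                        const std::vector<std::string>& knownWords,
                        int maxDistance = 1) {
    std::string best = input;
    int bestDistance = maxDistance + 1;
    for (const std::string& word : knownWords) {
        // The distance is at least the length difference, so words whose
        // lengths differ too much are skipped without filling the table.
        const int lengthGap = std::abs(static_cast<int>(word.size()) -
                                       static_cast<int>(input.size()));
        if (lengthGap > maxDistance) continue;
        const int distance = editDistance(input, word);
        if (distance < bestDistance) {
            bestDistance = distance;
            best = word;
        }
    }
    return best;
}
```

The length check is one cheap way to keep the scan fast as the word list grows, because most known words are rejected before any table is built. Grouping the known words by length ahead of time would avoid even visiting the rejected ones.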