Why Are We Building An AI Engine?

[Watch the Dystopia game teaser here and learn more about the world of Dystopia.]

After producing five game prototypes and one officially released mobile game (check out the games section on our website), we were trying to determine our next move. These prototypes and the release taught us many lessons, which allowed us to identify what we were good at, our weaknesses, and the scope and constraints of the projects we could take on as a team. The next logical step was to conduct a SWOT (strengths, weaknesses, opportunities, threats) analysis to help us decide on our next project. In doing so, we came up with the project code-named Dystopia. It capitalizes on our team’s strengths, minimizes our weaknesses, and takes advantage of numerous opportunities in the indie game market. An AI engine therefore became a requirement for project Dystopia. Since then, I’ve spent an average of 8 hours a week over the last 3 months designing and implementing an AI engine. To chart this course, I wrote out 3 simple objectives for the engine:

- Must allow AI to sense their environment (e.g., receive sounds and sights)

- Must allow AI to interact with and affect their environment

- Must allow AI to engage the player in unique and exciting ways

These high-level objectives served as a roadmap for the last three months. I summarize them as sensing, action execution, and planning, respectively. I’ve managed to tackle the first two objectives and lay a foundation for the third. This first video (AI Engine Blog Video One) demonstrates a baseline of the engine. The baseline provides the basic necessities for any AI to exist in a game. More importantly, it lays the foundation for incrementally building novel capabilities into the engine.
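
One way to picture the three objectives working together is a per-update loop in which an agent senses, then plans, then acts. The Python sketch below illustrates that loop under stated assumptions: the engine itself runs in Unity, and the `Agent`, `Stimulus`, `sense`, `plan`, and `act` names, along with the placeholder "investigate the latest stimulus" policy, are hypothetical illustrations rather than the engine’s actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Stimulus:
    """Hypothetical sensory signal, e.g. a sound or a sighting."""
    kind: str    # "sound" or "sight" (illustrative values)
    source: str  # name of the object that produced the signal


@dataclass
class Agent:
    name: str
    inbox: list = field(default_factory=list)   # stimuli received since the last tick
    memory: list = field(default_factory=list)  # everything sensed so far
    log: list = field(default_factory=list)     # actions executed, kept for inspection

    def sense(self):
        """Objective (1): move incoming stimuli into memory."""
        self.memory.extend(self.inbox)
        self.inbox.clear()

    def plan(self):
        """Objective (3): choose actions from what has been sensed.

        Placeholder policy (an assumption): investigate the most recent
        stimulus, otherwise idle.
        """
        if self.memory:
            return [f"investigate {self.memory[-1].source}"]
        return ["idle"]

    def act(self, actions):
        """Objective (2): execute actions (here they are only recorded)."""
        self.log.extend(actions)

    def tick(self):
        # One update: sense, then plan, then act.
        self.sense()
        self.act(self.plan())


guard = Agent("guard")
guard.tick()                                   # nothing sensed yet
guard.inbox.append(Stimulus("sound", "door"))  # a door makes a noise
guard.tick()
print(guard.log)  # ['idle', 'investigate door']
```

Keeping the three stages as separate methods means each can grow independently, which matches the incremental approach described above.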

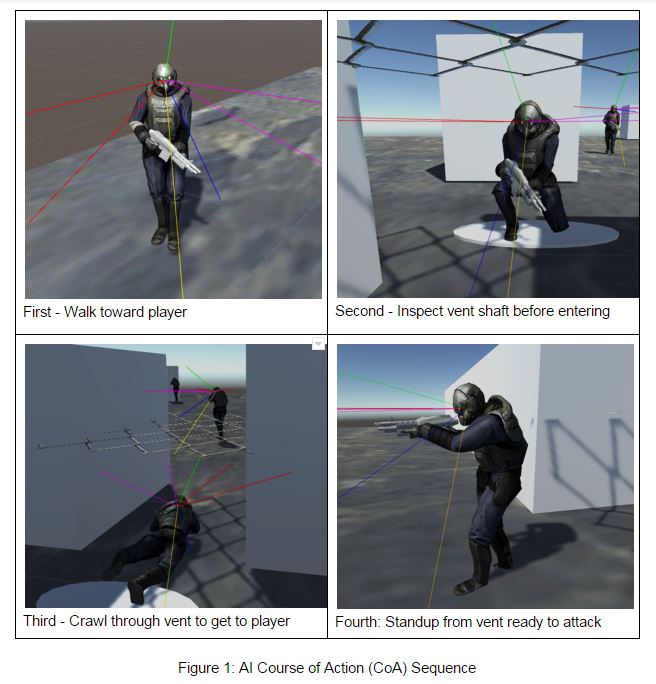

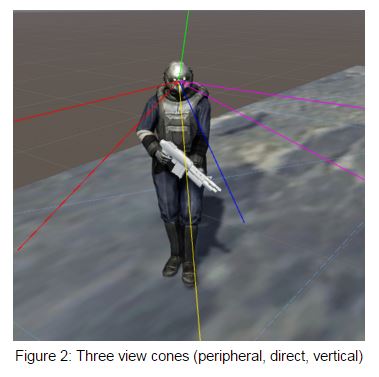

One crucial thing I’d like to point out is how the Unity3D engine has allowed us to focus our team resources on innovation. Unity3D lets indie game developers focus on designing games rather than implementing core engine technologies, at a very low cost. Mecanim, Unity’s animation state machine, and its pathfinding capabilities are two examples. As the sole AI engine developer on the team, with limited hours to work, I don’t have to develop a custom animation state machine or pathfinding system, which allows the team to focus on building novel systems that support innovative game mechanics.

The current baseline of the engine achieves objectives (1) and (2): our AI can now hear and see things in their environment, execute actions, and interact with the environment. We achieved these objectives by developing a sensor manager, an event manager, and a cognitive architecture that incorporates cognition states and action trees. Integrating these systems with Unity’s pathfinding and Mecanim components completed the baseline.

The video demonstrates these components working together. At the start of the scene, illustrated in step one of Fig. 1, the AI loads a scripted course of action (CoA). The CoA is determined by the AI’s current state: the state dictates the goal the AI is trying to achieve, and the CoA loaded is the one identified as best for achieving that goal. In this case, the AI is in an attack state, and we wanted to simulate a CoA for meleeing the player. A CoA is a set of action trees, and each action tree, as it executes, drives Unity’s pathfinding and Mecanim systems. And yes, our action trees support both compound and sequential action execution.
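
Compound and sequential action execution can be made concrete with a small sketch. The Python below assumes that a sequential node runs its children one after another and that a compound node ticks all of its children in the same step until each finishes. `Action`, `Sequence`, `Compound`, `build_coa`, `run`, and the state-to-CoA lookup are hypothetical names, and the leaf actions merely count down ticks where the real engine would drive Unity’s pathfinding and Mecanim.

```python
class Action:
    """Leaf action that needs a fixed number of ticks to finish (hypothetical stand-in)."""

    def __init__(self, name, ticks, trace):
        self.name = name
        self.remaining = ticks
        self.trace = trace  # shared list recording which action ran on each tick

    def tick(self):
        """Advance one step; return True once the action has finished."""
        if self.remaining > 0:
            self.trace.append(self.name)
            self.remaining -= 1
        return self.remaining == 0


class Sequence:
    """Sequential execution: runs children one after another."""

    def __init__(self, *children):
        self.children = list(children)
        self.index = 0

    def tick(self):
        # Only the current child is ticked; move on when it finishes.
        if self.index < len(self.children) and self.children[self.index].tick():
            self.index += 1
        return self.index == len(self.children)


class Compound:
    """Compound execution: ticks all unfinished children in the same step."""

    def __init__(self, *children):
        self.children = list(children)
        self.done = [False] * len(self.children)

    def tick(self):
        for i, child in enumerate(self.children):
            if not self.done[i]:
                self.done[i] = child.tick()
        return all(self.done)


def build_coa(state, trace):
    """Hypothetical lookup from a cognition state to a CoA (a list of action trees)."""
    if state == "attack":
        # Close in on the player while drawing a weapon, then strike.
        melee_tree = Sequence(
            Compound(Action("move_to_player", 2, trace), Action("draw_weapon", 1, trace)),
            Action("melee", 1, trace),
        )
        return [melee_tree]
    return [Action("patrol", 1, trace)]


def run(coa, max_ticks=100):
    """Execute each action tree of the CoA in order, with a safety cap on ticks."""
    for tree in coa:
        for _ in range(max_ticks):
            if tree.tick():
                break


trace = []
run(build_coa("attack", trace))
print(trace)  # ['move_to_player', 'draw_weapon', 'move_to_player', 'melee']
```

In this sketch the AI moves toward the player and draws its weapon in parallel, and only then performs the melee action, loosely mirroring the attack-state CoA described above.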

The colorful rays protruding from the AI in Fig. 2 are their multiple view cones, which model peripheral vision, direct vision, and vertical (up and down) vision. We have a debugging script that visualizes the cones, so we know where the AI are looking when they receive vision signals.
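
A view cone test reduces to a range check plus an angle check against the cone’s axis. The Python sketch below assumes each cone is defined by an axis direction, a half-angle, and a maximum range, with the agent facing +z and y pointing up; the cone names, angles, and ranges are invented for illustration and are not taken from the engine.

```python
import math
from dataclasses import dataclass


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


@dataclass
class ViewCone:
    name: str
    direction: tuple       # cone axis (x, y, z); assumes y is up and the agent faces +z
    half_angle_deg: float  # angle from the axis to the cone's edge
    max_range: float

    def contains(self, eye, target):
        """True if target is within max_range and within half_angle_deg of the axis."""
        offset = sub(target, eye)
        dist = math.sqrt(dot(offset, offset))
        if dist > self.max_range:
            return False
        if dist == 0.0:
            return True
        axis_len = math.sqrt(dot(self.direction, self.direction))
        cos_angle = dot(offset, self.direction) / (dist * axis_len)
        return cos_angle >= math.cos(math.radians(self.half_angle_deg))


# Illustrative cone set; all angles and ranges are made up.
CONES = [
    ViewCone("direct", (0.0, 0.0, 1.0), 15.0, 20.0),
    ViewCone("peripheral", (0.0, 0.0, 1.0), 60.0, 8.0),
    ViewCone("up", (0.0, 1.0, 1.0), 20.0, 10.0),
    ViewCone("down", (0.0, -1.0, 1.0), 20.0, 10.0),
]


def visible_in(cones, eye, target):
    """Names of all cones that currently contain the target."""
    return [c.name for c in cones if c.contains(eye, target)]


EYE = (0.0, 2.0, 0.0)
print(visible_in(CONES, EYE, (0.0, 2.0, 15.0)))  # ['direct']
print(visible_in(CONES, EYE, (4.0, 2.0, 4.0)))   # ['peripheral']
print(visible_in(CONES, EYE, (0.0, 6.0, 4.0)))   # ['peripheral', 'up']
```

The sketch ignores the agent’s rotation and occlusion; a fuller version would rotate each cone axis by the agent’s orientation and cast a ray to confirm line of sight before reporting a vision signal.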

With an AI engine baseline now implemented, we are busy designing and implementing more AI actions (e.g., opening doors and inspecting rooms). In the very near future, we will release a new video showcasing a player-versus-AI scenario in which the AI has a larger set of actions at its disposal for engaging the player. Stay tuned by visiting our AI Engine development blog.