This series is not an OpenGL/make-a-game tutorial. Don't get me wrong, I'd love to write that someday. A lot of what I do day to day is inspired by (and, in certain cases, blatantly frankensteined from) the people providing free, great tutorial content online. I will link a couple of the major influences at the end of this post, and I encourage you to go check 'em out if you are into graphics work. But I don't yet want to produce a tutorial without a game under my belt. I'm still a bumbling monkey.

So, let's talk OpenGL. What is OpenGL? It's a group of specifications (more on this word choice in a bit) maintained by the Khronos Group that, in essence, lets you interface with your graphics hardware so you can have pretty pictures show up on your screen. Graphics hardware is a specialized processor that outputs a signal that is then interpreted by your monitor. There is some kind of graphics processor somewhere in your computer. If you didn't have one, you couldn't read this.

Because graphics hardware is supposed to push pictures onto your screen rapidly, it is built to optimize that kind of operation. It has a lot of cores, which means it can execute a lot of commands simultaneously, but it does not have the sophisticated memory controllers that CPUs have. So it is great at running lots of "simple" calculations in parallel, but complicated instructions don't go over well. Graphics processors do not like interrupts or syncs with the host system. They also do not play very nice with memory transfer: a lot of graphics programming involves minimizing the amount of stuff you have to tell the graphics hardware. Seasoned programmers will note that I'm talking about AZDO (Approaching Zero Driver Overhead) here.

So think of the graphics processor as the super brawny but dumb enforcer, and the CPU as the brains of the operation. That's the analogy I used with my parents when they asked me about my college thesis, so it should work for my loving audience.

Like I said, you must already have a graphics processor of some flavor. There are primarily three vendors of graphics hardware on the market: Intel, AMD, and Nvidia. Intel primarily makes integrated graphics processors that grace economical solutions. More expensive computers, in general, will have what we call discrete graphics: an AMD or Nvidia card that slots into a PCIe slot. You know, the thing you can't buy because the crypto people are hogging all the cards on the market.

So what, you might ask. Why is it of any concern to you as a game dev what brand of card I have? They all have drivers that support OpenGL, so who cares what my card vendor is?

OpenGL is a set of specifications. It's a group of function calls that are supposed to do more or less the same thing on every card. But each vendor ships its own drivers (because the architecture of the chips is proprietary), and these drivers are supposed to translate OpenGL calls into something the card can understand. These drivers sometimes take liberties.

Before I move on, I want to talk about my personal development setup. I have 2 monitors, one being driven by an Nvidia GTX 980, and the other by Intel's HD 4600. This is a really bizarre way to run things, but it's gonna make sense in a bit.

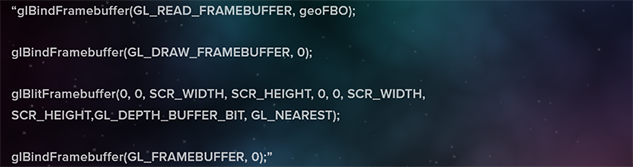

See the following code snippet:

That's a lot of API calls. But it's not complicated code: we take a framebuffer (basically a data structure that holds the per-pixel information produced by a render pass on the graphics card), take its depth information, and transfer that to the default framebuffer, which is what goes to the monitor. This is called blitting. Blitting is really useful when you want to be fancy with framebuffers and need to copy framebuffer data to the default framebuffer.
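A depth blit like the one described above usually boils down to two binds and one blit call. Here is a minimal sketch under some loud assumptions: `BlitApi` and `blitDepthToDefault` are made-up names for illustration, the function pointers stand in for `glBindFramebuffer` and `glBlitFramebuffer` so the example compiles without a GL header or context, and the constants mirror the values of the `GL_*` names noted beside them.

```cpp
#include <cassert>
#include <cstdint>

// Local stand-ins so this compiles without a GL header. Each value mirrors
// the GL_* constant named in the trailing comment.
constexpr std::uint32_t kReadFramebuffer = 0x8CA8;  // GL_READ_FRAMEBUFFER
constexpr std::uint32_t kDrawFramebuffer = 0x8CA9;  // GL_DRAW_FRAMEBUFFER
constexpr std::uint32_t kDepthBufferBit  = 0x0100;  // GL_DEPTH_BUFFER_BIT
constexpr std::uint32_t kNearest         = 0x2600;  // GL_NEAREST

// Hypothetical wrapper around the two GL entry points a blit needs. In a
// real program you would call glBindFramebuffer / glBlitFramebuffer directly.
struct BlitApi {
    void (*bindFramebuffer)(std::uint32_t target, std::uint32_t fbo);
    void (*blitFramebuffer)(int sx0, int sy0, int sx1, int sy1,
                            int dx0, int dy0, int dx1, int dy1,
                            std::uint32_t mask, std::uint32_t filter);
};

// Copy the depth buffer of `sourceFbo` into the default framebuffer (id 0).
void blitDepthToDefault(const BlitApi& gl, std::uint32_t sourceFbo,
                        int width, int height) {
    assert(width > 0 && height > 0);
    gl.bindFramebuffer(kReadFramebuffer, sourceFbo);  // read from our FBO
    gl.bindFramebuffer(kDrawFramebuffer, 0);          // write to the window
    // Depth and stencil blits must use GL_NEAREST as the filter.
    gl.blitFramebuffer(0, 0, width, height,
                       0, 0, width, height,
                       kDepthBufferBit, kNearest);
}
```

Passing the entry points in through a struct is only there to keep the sketch self-contained; the order of the calls is the part that matters.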

That blit runs perfectly on my 980. It throws errors on my Intel HD 4600.

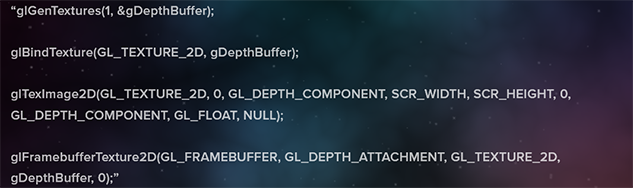

This took a long time to diagnose. Eventually, I narrowed it down to the creation of the framebuffer object in question:

This is how the depth attachment is being created, and it's entirely above board. This is how you're supposed to create depth attachments. Hell, it works on my Nvidia card without any issues whatsoever. So what gives, Intel?

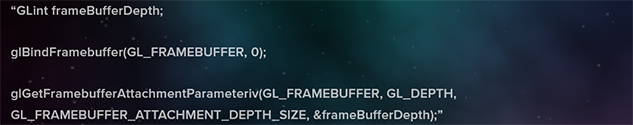

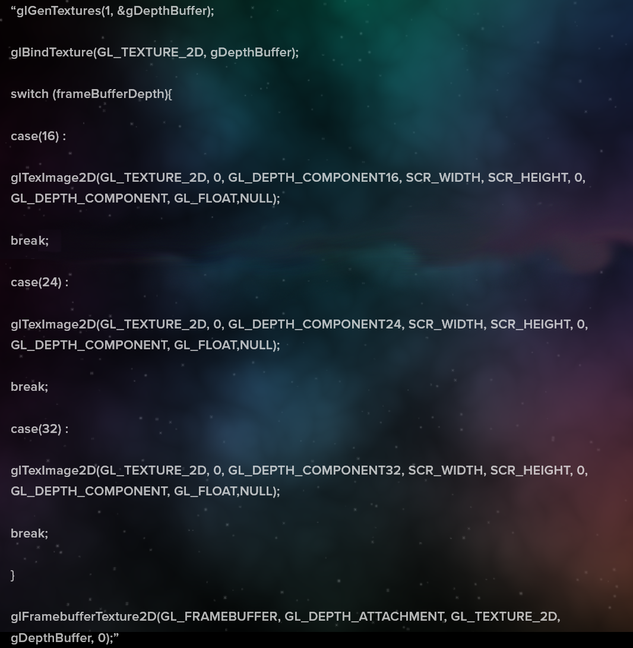

Here's an OpenGL top tip: if your code is doing erratic things on the screen, poll for errors and see which function call raises them. The Khronos reference pages list the conditions under which each function can generate an error. Using this method, I figured out that the blit generates an error (`GL_INVALID_OPERATION`, per the `glBlitFramebuffer` reference page) if the depth format of the framebuffer being read from doesn't match the format of the one being written to. So, bring out the duct tape:

and

Now it works.
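The error-polling tip can be sketched roughly like this. The names `glErrorName` and `drainGlErrors` are invented for illustration, and `getError` is a stand-in for `glGetError` so the example compiles without a GL header; the numeric codes are the values the GL headers assign to the error names shown.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Map a glGetError() code to the name of the matching GL_* constant.
const char* glErrorName(std::uint32_t code) {
    switch (code) {
        case 0x0000: return "GL_NO_ERROR";
        case 0x0500: return "GL_INVALID_ENUM";
        case 0x0501: return "GL_INVALID_VALUE";
        case 0x0502: return "GL_INVALID_OPERATION";
        case 0x0503: return "GL_STACK_OVERFLOW";
        case 0x0504: return "GL_STACK_UNDERFLOW";
        case 0x0505: return "GL_OUT_OF_MEMORY";
        case 0x0506: return "GL_INVALID_FRAMEBUFFER_OPERATION";
        default:     return "unknown GL error";
    }
}

// Drain the error queue after a suspicious call. glGetError hands back one
// flag per call, so keep asking until it reports GL_NO_ERROR (0).
// `getError` stands in for glGetError; `where` labels the call just made.
std::vector<std::string> drainGlErrors(std::uint32_t (*getError)(),
                                       const std::string& where) {
    assert(getError != nullptr);
    std::vector<std::string> messages;
    // Cap the loop so we never spin forever if the driver keeps returning
    // an error (the cap of 32 is an arbitrary choice for this sketch).
    constexpr int kMaxErrors = 32;
    for (int i = 0; i < kMaxErrors; ++i) {
        const std::uint32_t code = getError();
        if (code == 0) break;
        messages.push_back(where + ": " + glErrorName(code));
    }
    return messages;
}
```

Calling something like `drainGlErrors(glGetError, "glBlitFramebuffer")` right after each suspect call narrows down which one is responsible; from there the reference page for that function tells you what the error code means in context.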

If you are programming in OpenGL and you intend to blit to the default framebuffer, I highly recommend this janky process of querying how the default framebuffer is formatted. Hopefully this will be useful information to the 5 people who do that.
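One way such a query could feed into framebuffer creation is sketched below. This is an assumption about the general approach, not a reproduction of the fix in this post: `matchDefaultDepthFormat` is a hypothetical helper that takes the depth and stencil bit counts reported for the default framebuffer and picks a sized internal format to use for your own depth attachment. The constants mirror the values of the `GL_*` names beside them.

```cpp
#include <cassert>
#include <cstdint>

// Sized depth/stencil internal formats; each value mirrors the GL_* constant
// named in the trailing comment.
constexpr std::uint32_t kDepthComponent16 = 0x81A5;  // GL_DEPTH_COMPONENT16
constexpr std::uint32_t kDepthComponent24 = 0x81A6;  // GL_DEPTH_COMPONENT24
constexpr std::uint32_t kDepthComponent32 = 0x81A7;  // GL_DEPTH_COMPONENT32
constexpr std::uint32_t kDepth24Stencil8  = 0x88F0;  // GL_DEPTH24_STENCIL8

// Given the bit counts reported for the default framebuffer, pick an
// internal format for an FBO depth attachment that should match it.
// Returns 0 when this sketch has no obvious match, so the caller can
// fall back to something else (or skip the blit).
std::uint32_t matchDefaultDepthFormat(int depthBits, int stencilBits) {
    assert(depthBits >= 0 && stencilBits >= 0);
    if (depthBits == 24 && stencilBits == 8) return kDepth24Stencil8;
    if (stencilBits != 0) return 0;  // other packed layouts: not handled here
    switch (depthBits) {
        case 16: return kDepthComponent16;
        case 24: return kDepthComponent24;
        // Bit count alone cannot tell fixed-point from float depth;
        // this sketch assumes fixed-point.
        case 32: return kDepthComponent32;
        default: return 0;
    }
}
```

The bit counts themselves can be read with `glGetFramebufferAttachmentParameteriv` while framebuffer 0 is bound: attachment `GL_DEPTH` with `GL_FRAMEBUFFER_ATTACHMENT_DEPTH_SIZE`, and attachment `GL_STENCIL` with `GL_FRAMEBUFFER_ATTACHMENT_STENCIL_SIZE`. Whether a given driver then accepts the blit is exactly the kind of thing you still have to test on real hardware.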

When creating an engine, you have to bend over backwards to accommodate drivers doing their own thing, and there is no a priori way of finding out what you need to do to make it work on every platform. Just trial and error.

In the next post, I'll talk about some of the awesome stuff you can do with graphics engines that makes it all worthwhile. Until then, if OpenGL programming is your jam, I'd suggest:

- Learnopengl.com -> probably the best source of OpenGL material online.

- Youtube.com -> it's a small channel, but is very descriptive and got me started on this entire affair. Highly recommend.