3D picking might not seem super cutting-edge, but it's a surprisingly difficult and interesting problem with multiple possible solutions. As with all problems worth solving, we shall begin with the parameters and the motivation.

Zero Sum Future uses an isometric camera around a planet. We want our players to be able to place buildings on these planets, click on said buildings to learn stuff about them, and maybe even interact with them in some capacity. If you're concerned with interaction in more or less any strategy game, this blog post applies as-is. For other applications, you might need to modify the method I'm about to present.

But first, I want to explain how we're not doing it. Traditionally, 3D picking (the act of figuring out "dude, what's under my cursor?") is done with a fairly basic ray casting method. You give every pickable entity a "collision" box, cast a ray from the camera through the cursor's position on the screen, and check which box the ray hits first. There are ways to run this on the CPU or the GPU, you can use surfaces other than boxes, and so on. Because this is such a common approach, there is plenty of literature online on how to do it.
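For comparison, here's roughly what the traditional approach boils down to for a single box: the classic slab test for a ray against an axis-aligned bounding box. This is a minimal C++ sketch, not anything from Zero Sum Future; the `Vec3`, `Ray`, and `AABB` types are made up for illustration, and a real picker would also have to build the ray from the camera and cursor, then loop over every pickable entity and keep the nearest hit.

```cpp
#include <algorithm>
#include <array>
#include <limits>
#include <utility>

// Minimal 3-component vector for this sketch (hypothetical type).
using Vec3 = std::array<float, 3>;

struct Ray {
    Vec3 origin;
    Vec3 dir; // does not need to be normalized
};

struct AABB {
    Vec3 min;
    Vec3 max;
};

// Slab test: intersect the ray with the three pairs of parallel planes
// bounding the box. Returns true and writes the entry distance to tHit
// if the ray hits the box in front of its origin.
bool rayHitsBox(const Ray& ray, const AABB& box, float& tHit) {
    float tNear = 0.0f;
    float tFar = std::numeric_limits<float>::infinity();
    for (int axis = 0; axis < 3; ++axis) {
        if (ray.dir[axis] == 0.0f) {
            // Parallel to this slab: the origin must already lie inside it.
            if (ray.origin[axis] < box.min[axis] || ray.origin[axis] > box.max[axis]) {
                return false;
            }
            continue;
        }
        const float inv = 1.0f / ray.dir[axis];
        float t0 = (box.min[axis] - ray.origin[axis]) * inv;
        float t1 = (box.max[axis] - ray.origin[axis]) * inv;
        if (t0 > t1) std::swap(t0, t1);
        tNear = std::max(tNear, t0);
        tFar = std::min(tFar, t1);
        if (tNear > tFar) return false; // slab intervals don't overlap
    }
    tHit = tNear;
    return true;
}
```

Multiply that by every entity, every frame, plus the matrix math to get the ray in the first place, and you can see why I went looking for something lazier.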

The problem with the traditional method is that I'm lazy. Ray casting involves a lot of math, and I'm not good with math, or numbers, or anything involving linear algebra. I'd also need to construct the collision surfaces on the CPU side, that code would need to be efficient, and I really, really don't feel like writing efficient code if I can avoid it.

Allow me to present a truly lazy, brute-force solution that is pixel-accurate, which is more than ray casting against approximate collision boxes can offer: we assign each primitive on screen a pair of unsigned ints that identifies what it is, write those pairs into the framebuffer, and then read the pair under the cursor straight back from GPU memory.
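To make the idea concrete, here's a CPU-side stand-in for what ends up on the GPU: a buffer holding two unsigned ints per pixel (think of an `RG32UI` color attachment), written for every covered pixel and then queried at the cursor. Everything here is a hypothetical sketch rather than the engine's actual code, including `PickId`, `IdBuffer`, and the convention that a model ID of 0 means "nothing here", which assumes the attachment is cleared to zero and real model IDs start at 1.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// The identifying pair written for every pixel (hypothetical layout).
struct PickId {
    std::uint32_t modelId;    // which model; 0 is reserved for "nothing"
    std::uint32_t instanceId; // which instance of that model
};

// CPU stand-in for an RG32UI attachment: two uints per pixel,
// rows stored bottom-to-top like an OpenGL framebuffer.
class IdBuffer {
public:
    IdBuffer(int width, int height)
        : width_(width), height_(height),
          data_(static_cast<std::size_t>(width) * height * 2, 0u) {}

    // Stand-in for the fragment shader output. Caller keeps x, y in bounds.
    void write(int x, int y, PickId id) {
        const std::size_t i = index(x, y);
        data_[i] = id.modelId;
        data_[i + 1] = id.instanceId;
    }

    // Stand-in for the 1x1 readback under the cursor.
    std::optional<PickId> read(int x, int y) const {
        if (x < 0 || y < 0 || x >= width_ || y >= height_) return std::nullopt;
        const std::size_t i = index(x, y);
        if (data_[i] == 0u) return std::nullopt; // cleared background
        return PickId{data_[i], data_[i + 1]};
    }

private:
    std::size_t index(int x, int y) const {
        return (static_cast<std::size_t>(y) * width_ + x) * 2;
    }

    int width_;
    int height_;
    std::vector<std::uint32_t> data_;
};
```

No collision volumes, no ray math: whatever got rasterized last at that pixel (after depth testing) is the answer.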

The presentation below assumes a couple of things. First, I gloss over how I get the normalized device coordinates for the cursor (i.e. where the mouse is on the screen). If you are following along at home, Google your chosen context creation tool (SDL, GLFW, etc.) to find the method appropriate for you. Second, the method I use for identifying models on the screen might not be fine-grained enough for your purposes, but it should get the point across.
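Since I'm glossing over the cursor part, here is a small sketch of the coordinate bookkeeping. It assumes your windowing library reports the cursor in window pixels with the origin at the top-left (GLFW and SDL both do this) and that the window size matches the framebuffer size, i.e. no HiDPI scaling. The gotcha worth calling out is that `glReadPixels` counts rows from the bottom-left, so the y axis has to be flipped somewhere. The function and struct names are hypothetical.

```cpp
#include <algorithm>

struct Ndc { float x, y; };      // [-1, 1] on both axes, +y points up
struct PixelCoord { int x, y; }; // bottom-left origin, as glReadPixels expects

// Cursor in window pixels (origin top-left, +y down) -> NDC (+y up).
// Assumes window size == framebuffer size.
Ndc cursorToNdc(double cursorX, double cursorY, int width, int height) {
    const float x = static_cast<float>(2.0 * cursorX / width - 1.0);
    const float y = static_cast<float>(1.0 - 2.0 * cursorY / height);
    return {x, y};
}

// NDC -> integer pixel to pass to a 1x1 glReadPixels.
PixelCoord ndcToReadPixel(Ndc ndc, int width, int height) {
    const int x = static_cast<int>((ndc.x + 1.0f) * 0.5f * width);
    const int y = static_cast<int>((ndc.y + 1.0f) * 0.5f * height);
    // Clamp so a cursor sitting exactly on the far edge stays in range.
    return {std::clamp(x, 0, width - 1), std::clamp(y, 0, height - 1)};
}
```

For an 800x600 window, a cursor at the top-left corner maps to pixel (0, 599) and the center maps to (400, 300).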

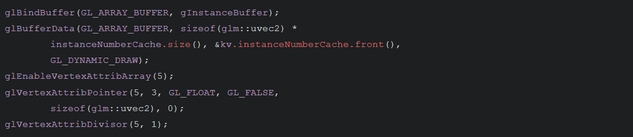

Okay, now the code. First, we need to assign our identifying pair of unsigned ints to our vertex array:

We use an instanced rendering approach in Zero Sum Future, so each model rendered on screen has two identifiers: which model it is, and which instance of that model it is. The InstanceNumberCache container holds the instance data (along with some other goodies I use in my deferred shading pipeline), while modelID is sent in as a uniform. We also need to set the attribute divisor, because we're doing instanced rendering: without it, the instance attribute advances per vertex rather than per instance, and our vertex shader gets a jumbled mess. I'm gonna gloss over the rest of the Vertex Array Object setup, because there are resources out there that explain it much better than I could (also, again, because I'm lazy).
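Here's what the attribute divisor actually changes, modeled on the CPU. With a divisor of 0 an attribute advances once per vertex; with a divisor of N it advances once every N instances. So with a divisor of 1, every vertex of a given instance reads the same instance record. The functions below are a hypothetical illustration of the instanced-array rules behind `glVertexAttribDivisor` (including the base-instance offset that draw calls like `glDrawArraysInstancedBaseInstance` apply), not something you'd call in real code.

```cpp
#include <cstdint>
#include <vector>

// Index of the attribute element a vertex shader invocation reads:
//   divisor == 0 -> one element per vertex
//   divisor == N -> one element per N instances, offset by baseInstance
std::uint32_t attributeElement(std::uint32_t vertexIndex, std::uint32_t instanceIndex,
                               std::uint32_t divisor, std::uint32_t baseInstance = 0) {
    if (divisor == 0) return vertexIndex;
    return instanceIndex / divisor + baseInstance;
}

// For a draw of `instanceCount` instances of a `vertexCount`-vertex mesh,
// list the element each vertex invocation receives, instance by instance.
std::vector<std::uint32_t> elementsSeen(std::uint32_t vertexCount,
                                        std::uint32_t instanceCount,
                                        std::uint32_t divisor) {
    std::vector<std::uint32_t> seen;
    for (std::uint32_t inst = 0; inst < instanceCount; ++inst) {
        for (std::uint32_t v = 0; v < vertexCount; ++v) {
            seen.push_back(attributeElement(v, inst, divisor));
        }
    }
    return seen;
}
```

Drawing two instances of a triangle with divisor 1 gives every vertex of instance 0 element 0 and every vertex of instance 1 element 1. Forget the divisor (leave it at 0) and the three vertices of each triangle read three different instance records, which is the jumbled mess mentioned above.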

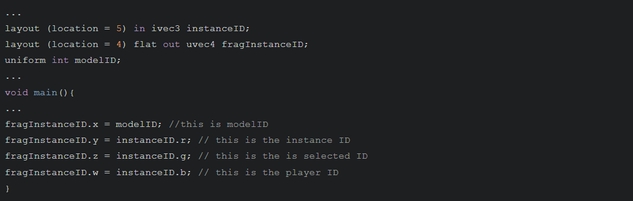

Now that we have our instance data, let us see how it works in the shader stages:

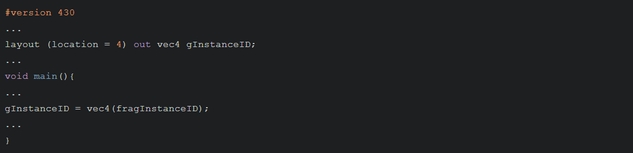

That was the vertex pass. You'll note that I have a bunch of other stuff in there, primarily because I use the instanceID information later down the pipeline. But you can of course trim up your identifiers however you wish. Next, let's move on to the fragment shader:

Well, that was easy. Now every pixel on screen has its identifying pair written to our geometry pass framebuffer. More astute readers will note that this is hilariously overkill: why write the entire screen into a giant framebuffer if all you care about is a single pair? Like I said, I use the instance info in other shaders, so it's no extra trouble for my application. If you're only interested in 3D picking, just write the pair you want to a Shader Storage Buffer Object (SSBO) instead.
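For a sense of scale, here's the arithmetic behind "hilariously overkill", assuming a two-channel 32-bit unsigned integer attachment (`RG32UI`) at 1080p. The helper names are made up; the point is the ratio between a full-screen ID attachment and the single pair you actually care about.

```cpp
#include <cstddef>
#include <cstdint>

// Bytes for an ID attachment storing two 32-bit unsigned ints per pixel.
constexpr std::size_t idAttachmentBytes(std::size_t width, std::size_t height) {
    return width * height * 2 * sizeof(std::uint32_t);
}

// Bytes if only the pair under the cursor is kept (e.g. in an SSBO).
constexpr std::size_t singlePairBytes() {
    return 2 * sizeof(std::uint32_t);
}

static_assert(idAttachmentBytes(1920, 1080) == 16'588'800, "1080p RG32UI attachment");
static_assert(singlePairBytes() == 8, "one pair of uints");
```

That's roughly 15.8 MiB of attachment (and the fill bandwidth to write it every frame) for 8 bytes of useful answer, and four times that at 4K. If something else in your pipeline already consumes those IDs, as in ZSF, the cost is already paid; otherwise, the SSBO route is the leaner choice.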

Now that we have the entire screen's info, let's read it back to find out what's under the cursor. Here is how not to do it. See if you can spot the problem that took me about 3-4 months to find:

If you found the issue right away, you're much better at this than I am. Go on, give yourself a minute or two. No, it works; that's not the issue. But it's really, really slow.

See, for OpenGL to actually read the info back to you from a framebuffer texture, it has to stop what it's doing and attend to your request. If you remember from my last post, graphics hardware does not tolerate the CPU asking it for things. A read into CPU memory can't return until the GPU has finished every command queued before it, so the above method forces a sync between the hardware, the driver, and the CPU, and the whole operation grinds to a halt while you wait to figure out what's under the damn mouse cursor.

But there is a very clever OpenGL feature that solves this issue: Pixel Buffer Objects.

The real magic happens in the glReadPixels call. See, if a buffer is bound to GL_PIXEL_PACK_BUFFER when you call glReadPixels, OpenGL writes the pixels into that buffer instead of into your CPU memory, which lets it perform the transfer asynchronously. So the driver says "Fine, I got it, I'll get to it when I have time." Calling glReadPixels now returns almost immediately, which is great. But we can't read the result right away: if we try to map the buffer before the driver has gotten around to transferring the pixel data we want, the system will hang again.

Not good, but the solution is very simple: we create several pixel buffers, maxMouseBuffers of them. Every frame, we rotate through these buffers so that the one we map for reading is the oldest, lagging behind the buffers still on the driver's to-do list. Now the driver has (maxMouseBuffers - 1) frames of wiggle room before it has to deliver, and with some fiddling with that number (for ZSF, triple buffering seemed to get rid of all the hanging) you can get the read time down to almost nothing.
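Here is a CPU-only model of the buffer rotation, to show where the (maxMouseBuffers - 1) frames of slack come from. In real code, the slot at `writeIndex` would be the buffer bound to `GL_PIXEL_PACK_BUFFER` when you call `glReadPixels`, and the slot at `readIndex` would be the one you map (for example with `glMapBufferRange`) and read. The class and method names are hypothetical, and no OpenGL calls are made; each slot just remembers which frame's request it holds.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Model of a ring of pixel pack buffers. Each frame, one slot receives a
// new asynchronous read request and the oldest slot is mapped and read.
class ReadbackRing {
public:
    explicit ReadbackRing(std::size_t maxMouseBuffers)
        : requestFrame_(maxMouseBuffers == 0 ? 1 : maxMouseBuffers) {}

    // Call once per frame. Returns the frame whose request is mapped this
    // frame, or nullopt while the ring is still filling up.
    std::optional<std::uint64_t> advance(std::uint64_t frame) {
        const std::size_t n = requestFrame_.size();
        const std::size_t writeIndex = frame % n;      // glReadPixels into this one
        const std::size_t readIndex = (frame + 1) % n; // map this (oldest) one
        requestFrame_[writeIndex] = frame;
        return requestFrame_[readIndex];
    }

private:
    std::vector<std::optional<std::uint64_t>> requestFrame_;
};
```

With three buffers, the result mapped on frame 2 is the one requested on frame 0, so the driver gets two frames to finish each transfer. With a single buffer, `readIndex` equals `writeIndex`, so you map the very buffer you just requested into, which is exactly the stall we were trying to avoid.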

There are some considerations with this method I should note:

- The cursor reading lags behind the request by at least maxMouseBuffers frames. In Zero Sum Future, I also do something very similar to read back position vectors so we can place buildings as accurately as possible, so that reading lags behind even more. It's not really noticeable to humans in our application, but if you're developing a very twitchy kind of game, this might be a consideration.

- The output of this method is pixel-perfect: we pick whatever our cursor is floating over. While this is very cool, it can feel odd at first to people who are used to the more generous hitboxes of other games. Our testing indicates that people get used to it very quickly, but there is an adaptation period.

- What about applying this method to other games? In a first-person shooter, you could use it for hitscan weapons: the mouse coordinates would be the crosshair, and you might introduce a bit of camera sway to mimic accuracy drop-off while moving. I can also imagine a game with beam weapons making use of it.
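To put the lag from the first point in perspective, converting frames of delay into milliseconds is simple arithmetic. The helper below is hypothetical, and the frame counts and frame rates in the note after it are illustrative, not measurements from Zero Sum Future.

```cpp
// Approximate delay, in milliseconds, between requesting a pick and
// reading its result, given how many frames the result lags behind.
constexpr double pickLatencyMs(int laggedFrames, double framesPerSecond) {
    return laggedFrames * 1000.0 / framesPerSecond;
}
```

Three frames of lag at 60 fps works out to 50 ms; at 144 fps, the same three frames is about 21 ms. That's comfortable for clicking on buildings, but it's the kind of number a twitchy shooter would care about.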

Well, that concludes how we do 3D picking in Zero Sum Future. I'm not going to claim it's cutting-edge tech, but I think it's a neat, low-impact solution, and I'd recommend it to anyone foolish enough to make their own engine from scratch.