Distortion Effect

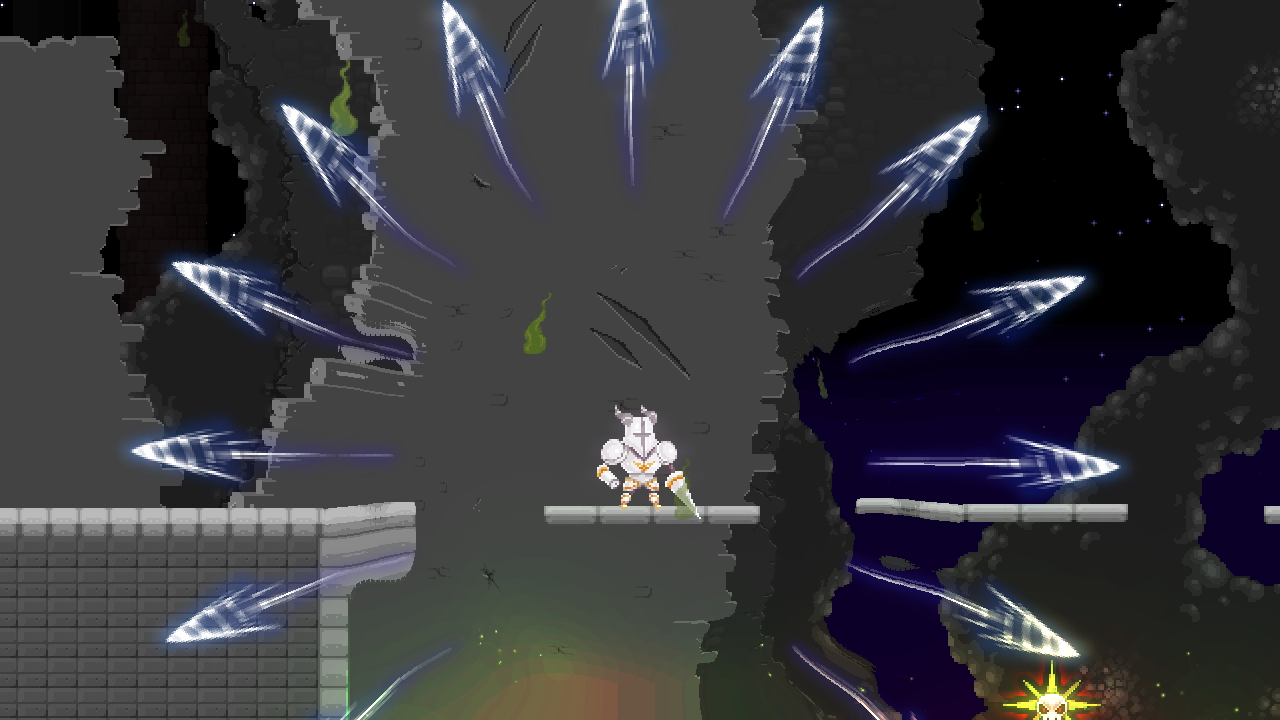

As requested, here is a quick overview of the distortion effect! In the image you can see the effect used for an attack you can unlock via a perk.

Low Quality GIF

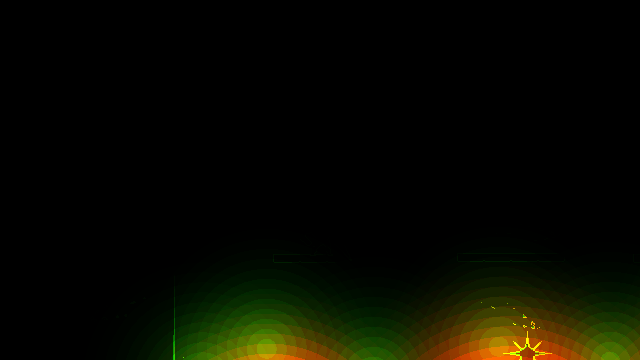

Final image

Quick overview of the state of the pipeline.

Distortion Stages

Distortion is treated as a full-screen post-processing effect. That means it takes a pre-stage render target as input and writes its result to a post-stage render target. In this case, the input is the world render after glow is added.

Distortion render target

A post-processing stage is defined like this:

    {
        void Apply(RenderTarget2D _pre, ref RenderTarget2D _post,
                   SpriteBatch _spriteBatch);

        void SaveRTs(string _dir, string _time);
    }

I render the distortion information to a separate render target. I then draw the pre-stage texture to the post-stage render target, sampling from the distortion texture.

The distortion texture contains offset information for each pixel on the screen. The information is encoded in the following format:

R: X-Offset to the left

G: X-Offset to the right

B: Y-Offset upwards

A: Y-Offset downwards

You may ask why I don't just use the red channel for x- and the green channel for y-offset, interpreting 0.5 as no offset.

Splitting it up into four channels allows me to render each distortion effect additively. If two effects overlap, the distortions will either cancel out or be added together.

rgba(0, 1, 0, 1) + rgba(1, 0, 1, 0) = rgba(1, 1, 1, 1)

offset (1, 1) + offset (-1, -1) = offset (0, 0)

Again, as mentioned in the last post, this was not my idea; all credit goes to Eric Wheeler!
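To make the arithmetic above concrete, here is a small CPU-side sketch in C#. It is not the game's code: the class and method names are made up for illustration, and System.Numerics vectors stand in for the shader types. It packs two opposite offsets into the four channels, adds them, and decodes the sum. For contrast, it also shows what happens when two "no offset" pixels are added in the 0.5-centered scheme.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, only meant to illustrate the channel layout.
static class FourChannelDemo
{
    // Pack a signed offset into (R: left, G: right, B: up, A: down).
    public static Vector4 Encode(Vector2 offset) => new Vector4(
        Math.Max(-offset.X, 0f),  // R: left
        Math.Max(offset.X, 0f),   // G: right
        Math.Max(-offset.Y, 0f),  // B: up
        Math.Max(offset.Y, 0f));  // A: down

    // Recover the signed offset from the four channels.
    public static Vector2 Decode(Vector4 c) => new Vector2(c.Y - c.X, c.W - c.Z);

    // The alternative scheme: one channel per axis, 0.5 meaning "no offset".
    public static float EncodeCentered(float offset) => 0.5f + 0.5f * offset;
    public static float DecodeCentered(float channel) => (channel - 0.5f) * 2f;

    public static void Main()
    {
        Vector4 a = Encode(new Vector2(1f, 1f));    // rgba(0, 1, 0, 1)
        Vector4 b = Encode(new Vector2(-1f, -1f));  // rgba(1, 0, 1, 0)
        Console.WriteLine(Decode(a + b));           // the two offsets cancel out

        // Two overlapping "no offset" pixels add up to 1.0 in the centered
        // scheme, which decodes as a full offset instead of none.
        float sum = EncodeCentered(0f) + EncodeCentered(0f);
        Console.WriteLine(DecodeCentered(sum));
    }
}
```

The second half is the reason the centered encoding does not mix well with additive blending: "nothing" is not zero there, so every overlapping draw shifts the result.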

So, how does the distortion target actually get filled?

1. Render stuff to the distortion render target

    device.Clear(Color.Transparent);

    foreach (DistortionBaseEffect effect in effects)
    {
        effect.Apply(_spriteBatch, _pre);
    }

I have a base class called "DistortionBaseEffect". It serves as the common interface for everything that renders to the distortion target, which allows me to implement different methods of generating distortion. At the moment I have implemented rendering for:

- Textures (using the alpha channel for distortion strength)

- A wave effect (used in the gif)

Each method uses a different pixel shader. Those shaders are the same except for an offset function:

    {
        float2 offset = offsetFunc(TexCoord);
        float4 distortion = float4(0, 0, 0, 0);

        //Negative x goes to R (left), positive x to G (right)
        if(offset.x < 0)
            distortion.r = abs(offset.x);
        else
            distortion.g = offset.x;

        //Negative y goes to B (up), positive y to A (down)
        if(offset.y < 0)
            distortion.b = abs(offset.y);
        else
            distortion.a = offset.y;

        return distortion * 5;
    }
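The "same shader, different offset function" idea can be sketched on the CPU as well. The following C# snippet is an illustration under assumptions, not the actual shader setup: `Pack` mirrors the shared body above, while the two lambdas are hypothetical stand-ins for the per-effect offset functions, with values picked arbitrarily.

```csharp
using System;
using System.Numerics;

// Hypothetical CPU mirror of the shared shader body.
static class DistortionPackDemo
{
    const float Scale = 5f; // same factor the shader multiplies by

    // Call the effect-specific offset function, then route each signed
    // component to its own channel (X=R, Y=G, Z=B, W=A).
    public static Vector4 Pack(Func<Vector2, Vector2> offsetFunc, Vector2 texCoord)
    {
        Vector2 offset = offsetFunc(texCoord);
        Vector4 distortion = Vector4.Zero;

        if (offset.X < 0) distortion.X = Math.Abs(offset.X); // R: left
        else distortion.Y = offset.X;                         // G: right

        if (offset.Y < 0) distortion.Z = Math.Abs(offset.Y); // B: up
        else distortion.W = offset.Y;                         // A: down

        return distortion * Scale;
    }

    public static void Main()
    {
        // Made-up offset functions; the real ones sample a texture or a wave.
        Func<Vector2, Vector2> pushLeftUp = uv => new Vector2(-0.02f, -0.01f);
        Func<Vector2, Vector2> pushRightDown = uv => new Vector2(0.02f, 0.01f);

        Vector2 center = new Vector2(0.5f, 0.5f);
        Console.WriteLine(Pack(pushLeftUp, center));    // only R and B are non-zero
        Console.WriteLine(Pack(pushRightDown, center)); // only G and A are non-zero
    }
}
```

Only the function passed in changes between effects; the routing into channels and the scale factor stay identical, which is what keeps the results compatible when they are blended additively.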

Textures

I can use any texture I want for distortion. In fact, I can just attach the "TextureDistortion" to a GameObject that has a sprite attached, and it will automatically render the sprite to the distortion target instead of the regular world target.

The offset function looks like this:

    {
        //Sample from texture
        float4 dist = tex2D(TextureSampler, _pos);

        //Calculate offset from alpha
        float2 offset = float2(dist.a, dist.a*1.5)*strength/100;

        return offset;
    }

Wave Effect

I implemented the wave effect to get a smoother result than I'd ever get from using a texture. I also have more control over parameters like the speed, radius, height and width of the wave.

    {
        //Scale texture coordinates appropriately
        _pos -= radius + width;
        _pos /= scale;

        //Length from the center of the rendered quad
        float x = length(_pos);

        //Calculate offset strength
        float val = cos((x - currentRadius)/(width/PI)) * strength/2 + strength/2;

        //Clip for ranges outside the width
        if(abs(x - currentRadius) > width)
            val = 0;

        //Offset in the direction from the center
        return normalize(_pos) * val;
    }
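The strength term in the shader above is a raised cosine centered on the current radius. As a rough C# sketch (the method name and the parameter values are assumptions for illustration only), the profile can be evaluated on the CPU to see its shape: it peaks at `strength` exactly on the ring, drops to half at half the width, and reaches zero at the edge of the band.

```csharp
using System;

// Hypothetical CPU version of the wave strength term.
static class WaveProfileDemo
{
    // Peak `strength` at distance currentRadius, falling to 0 at
    // currentRadius +/- width, and 0 everywhere outside that band.
    public static float WaveStrength(float x, float currentRadius, float width, float strength)
    {
        if (Math.Abs(x - currentRadius) > width)
            return 0f;

        double phase = (x - currentRadius) / (width / Math.PI);
        return (float)(Math.Cos(phase) * strength / 2 + strength / 2);
    }

    public static void Main()
    {
        // Illustrative values, not taken from the game.
        const float radius = 0.5f, width = 0.1f, strength = 1f;

        for (float x = 0.35f; x <= 0.651f; x += 0.05f)
            Console.WriteLine($"{x:F2} -> {WaveStrength(x, radius, width, strength):F3}");
    }
}
```

Because the cosine already reaches zero at the band edge, the clip does not introduce a visible step; it only stops the cosine from repeating further out.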

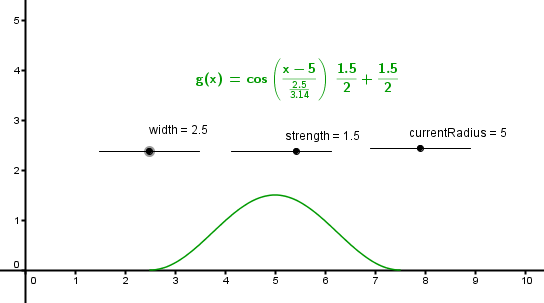

I built the function for the wave in GeoGebra. It looked like that in the program:

2. Render distortion to the post-stage target

To get the final output, I first render the pre-stage target without any effects. This works around a sampling issue where slight distortion showed up in areas that should not have any. I fixed it by clipping the distortion pass for pixels whose total distortion is under a certain threshold; the clipped pixels keep the undistorted color from this first pass.

    distortionApplyEffect.Parameters["distTex"].SetValue(distortionTarget);
    distortionApplyEffect.Parameters["screenDim"].SetValue(GraphicsEngine.Instance.Resolution);

    //First pass: plain copy of the pre-stage target
    _spriteBatch.Begin(SpriteSortMode.Immediate, BlendState.Opaque, SamplerState.PointClamp, DepthStencilState.None, RasterizerState.CullNone, null);
    _spriteBatch.Draw(_pre, GraphicsEngine.Instance.ScreenRectangle, Color.White);
    _spriteBatch.End();

    //Second pass: same draw, this time through the distortion apply effect
    _spriteBatch.Begin(SpriteSortMode.Immediate, BlendState.Opaque, SamplerState.PointClamp, DepthStencilState.None, RasterizerState.CullNone, distortionApplyEffect);
    _spriteBatch.Draw(_pre, GraphicsEngine.Instance.ScreenRectangle, Color.White);
    _spriteBatch.End();

I then render the pre-stage target again, this time with the distortion apply effect. The effect samples the distortion target, uses that information to offset the texture coordinates, and then samples the color of the pre-stage target at the resulting location.

    {
        //Sample from the distortion texture
        float4 sampleOffset = tex2D(DistortionSampler, screenPos)/5;

        //Calculate final offset
        float2 offset = float2(0,0);
        offset.x = -sampleOffset.r + sampleOffset.g;
        offset.y = -sampleOffset.b + sampleOffset.a;

        //Clip if under threshold
        clip(length(offset) < 0.001 ? -1 : 1);

        //Sample from new position
        return tex2D(TextureSampler, screenPos+offset);
    }
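To see how the plain copy and the clipped distortion pass work together, here is a simplified CPU-side sketch in C#. It makes several assumptions that are not in the original: the "screen" is a single row of integers, only the horizontal offset is used, sampling is nearest-pixel with clamping, and all names are made up.

```csharp
using System;
using System.Numerics;

// Hypothetical one-row model of the two-pass apply step.
static class DistortionApplyDemo
{
    // Point-sample a one-row "texture" at u in [0, 1], clamping at the edges.
    static int Sample(int[] row, float u)
    {
        int i = (int)Math.Floor(u * row.Length);
        return row[Math.Max(0, Math.Min(row.Length - 1, i))];
    }

    public static int[] Apply(int[] pre, Vector4[] distortion)
    {
        // Pass 1: plain copy of the pre-stage.
        int[] post = (int[])pre.Clone();

        // Pass 2: overwrite only pixels whose distortion passes the threshold.
        for (int i = 0; i < pre.Length; i++)
        {
            Vector4 s = distortion[i] / 5f;              // undo the x5 from the write step
            Vector2 offset = new Vector2(s.Y - s.X, s.W - s.Z);

            if (offset.Length() < 0.001f)
                continue;                                // "clip": keep the pass-1 pixel

            float u = (i + 0.5f) / pre.Length;           // pixel center in texture coordinates
            post[i] = Sample(pre, u + offset.X);         // read from the shifted position
        }
        return post;
    }

    public static void Main()
    {
        int[] pre = { 10, 20, 30, 40, 50 };
        Vector4[] distortion = new Vector4[pre.Length];  // all zero: no distortion
        distortion[2] = new Vector4(0f, 1f, 0f, 0f);     // G = 0.2 * 5: shift right by 0.2

        // Pixel 2 now shows the value of pixel 3; the rest is untouched.
        Console.WriteLine(string.Join(", ", Apply(pre, distortion)));
    }
}
```

Every pixel with a zero entry in the distortion row is skipped in the second pass and therefore keeps the value written by the first one, which is the role the plain copy plays in the real pipeline.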

Conclusion

Whoa, this post is long... I hope I explained everything adequately. If not, feel free to ask questions!

One open task is to use depth information to decide whether an effect should be rendered behind or in front of an object. This could add a lot of depth to the effect and make it useful in situations where there is a lot of parallax going on and distortion that is not occluded would stand out.

Like the effect. I didn't read the post (or at least most of it) but I will look into it. Seems interesting.

This is confusing to me, I'm not so good at maths and stuff.