This is a writeup of how the AI works in the current dev version of Orbital Assault. Details may change during development, but it should give some idea of where we're headed.

Pathfinding

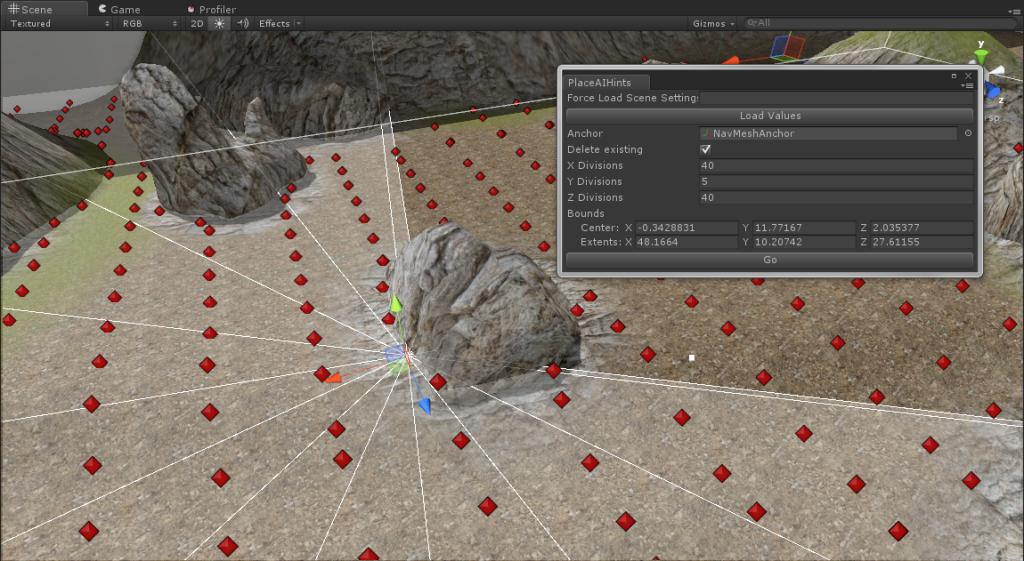

This is the first ingredient. Decision making aside, our bots need a way of determining where to go and how to get there. First of all, we decided to use Unity's built-in navigation system: internally, every bot uses a NavMeshAgent component to plan its route. Bots still go through the game's physics system, however (the NavMeshAgent's velocity is translated into virtual input axes).

We still need a way for bots to pick areas to wander, where to move for line of sight, where to take cover, where to throw grenades, and so on. To this end, I added an AI hint system. AI hints (or "waypoints") are scattered around the level, and each one contains data that is useful to the bot, such as what kind of cover it provides. We plan on supporting large open levels, however, so placing these points by hand is out of the question. Instead, I created an editor utility that places the hints automatically. You specify a box within which points are to be placed, the number of divisions of that box along the X, Y, and Z axes, and a "nav mesh anchor". Points are placed via a 3D grid of raycasts (think of it as a poor man's voxelization), and every point is then constrained to lie on the NavMesh via NavMesh.SamplePosition.

A screenshot of the tool in action, with one of the generated waypoints selected and showing the calculated cover
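A minimal sketch of the grid placement step, written in Python for readability rather than as the actual Unity editor utility. It assumes one downward ray per grid cell, cast from the top of the cell and limited to the cell's height, so that stacked floors each get their own points. `raycast_down` and `sample_navmesh` are hypothetical callables standing in for a physics raycast and for `NavMesh.SamplePosition`; both return a hit point or `None`.

```python
from typing import Callable, List, Optional, Set, Tuple

Vec3 = Tuple[float, float, float]


def generate_hints(
    box_min: Vec3,
    box_max: Vec3,
    divisions: Tuple[int, int, int],
    raycast_down: Callable[[Vec3, float], Optional[Vec3]],
    sample_navmesh: Callable[[Vec3], Optional[Vec3]],
) -> List[Vec3]:
    """Cast one downward ray per grid cell, snap hits to the nav mesh, dedupe."""
    (x0, y0, z0), (x1, y1, z1) = box_min, box_max
    nx, ny, nz = divisions
    cx, cy, cz = (x1 - x0) / nx, (y1 - y0) / ny, (z1 - z0) / nz
    hints: List[Vec3] = []
    seen: Set[Tuple[float, ...]] = set()
    for ix in range(nx):
        for iz in range(nz):
            for iy in range(ny):
                # Start at the top-centre of the cell; the ray only spans this
                # cell's height, so each floor of a multi-storey area is found.
                origin = (x0 + (ix + 0.5) * cx, y0 + (iy + 1) * cy, z0 + (iz + 0.5) * cz)
                hit = raycast_down(origin, cy)
                if hit is None:
                    continue
                snapped = sample_navmesh(hit)
                if snapped is None:
                    continue  # surface hit, but no nav mesh nearby
                # Rays from adjacent layers can hit the same surface; keep one.
                key = tuple(round(c, 3) for c in snapped)
                if key not in seen:
                    seen.add(key)
                    hints.append(snapped)
    return hints


def flat_floor(origin: Vec3, max_dist: float) -> Optional[Vec3]:
    """Toy scene: a single floor at y = 0."""
    x, y, z = origin
    return (x, 0.0, z) if y - max_dist <= 0.0 <= y else None


hints = generate_hints((0, 0, 0), (4, 4, 4), (2, 2, 2), flat_floor, lambda p: p)
print(hints)  # [(1.0, 0.0, 1.0), (1.0, 0.0, 3.0), (3.0, 0.0, 1.0), (3.0, 0.0, 3.0)]
```

In this toy scene the upper layer of cells never reaches the floor, so a 2 × 2 × 2 split yields one hint per column.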

The nav mesh anchor is used to solve an interesting problem. When two points lie on separate navmesh islands, Unity still reports that there is a valid path, even though (obviously) no such path exists: it returns a path that gets as close as possible but never quite reaches the destination. There's also no way to query whether two points lie on the same island. Therefore, when creating the AI hints, I calculate a path between each point and the nav mesh anchor, and if the path is incomplete, the point is pruned. This guarantees that every waypoint on the map is reachable from a given position (in my case, next to one of the team's spawns).
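One way to picture the pruning step: if the nav mesh is treated as a graph of connected polygons, a point's path to the anchor is complete exactly when the point's polygon is connected to the anchor's polygon. The sketch below is a toy model, not Unity's API. It does a single breadth-first flood fill from the anchor and keeps only the points inside the filled region, which gives the same answer as one path query per point. In Unity itself, the per-point check would test whether `NavMeshPath.status` equals `NavMeshPathStatus.PathComplete`. The polygon ids and the `point_polygon` mapping are hypothetical.

```python
from collections import deque
from typing import Dict, Hashable, Iterable, List, Set


def reachable_polygons(adjacency: Dict[int, List[int]], start: int) -> Set[int]:
    """Breadth-first flood fill over the nav mesh's polygon connectivity graph."""
    seen = {start}
    queue = deque([start])
    while queue:
        poly = queue.popleft()
        for neighbour in adjacency.get(poly, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def prune_unreachable(
    points: Iterable[Hashable],
    point_polygon: Dict[Hashable, int],
    adjacency: Dict[int, List[int]],
    anchor_polygon: int,
) -> List[Hashable]:
    """Keep only points whose polygon is connected to the anchor's polygon."""
    reachable = reachable_polygons(adjacency, anchor_polygon)
    return [p for p in points if point_polygon[p] in reachable]


# Two islands: polygons 0-1-2 (contains the anchor) and 3-4 (a disconnected rooftop).
adjacency = {0: [1], 1: [0, 2], 2: [1], 3: [4], 4: [3]}
point_polygon = {"courtyard": 0, "corridor": 2, "rooftop": 4}
print(prune_unreachable(list(point_polygon), point_polygon, adjacency, anchor_polygon=0))
# ['courtyard', 'corridor']
```

The flood fill visits each polygon once, so the cost no longer grows with the number of hints being tested.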

As for cover, I took inspiration from Killzone. Each point stores sixteen distance values, each representing a different "wedge" of a circle around the point. To check whether a point provides cover in a particular direction at a particular distance, you look up the distance value of the corresponding wedge. If that value is less than the target distance, the point provides cover.
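The wedge lookup can be sketched as follows. It assumes each stored value is the distance from the point to the nearest obstruction within that wedge (infinity meaning open), works in the horizontal X/Z plane, and numbers the wedges counter-clockwise starting from +X. Those conventions are my own for illustration, not necessarily the game's.

```python
import math
from typing import Sequence, Tuple

WEDGE_COUNT = 16
WEDGE_ANGLE = 2 * math.pi / WEDGE_COUNT

Point2 = Tuple[float, float]  # (x, z) on the ground plane


def wedge_index(dx: float, dz: float) -> int:
    """Map a horizontal direction to one of 16 equal wedges (0 starts at +X, counter-clockwise)."""
    angle = math.atan2(dz, dx) % (2 * math.pi)
    # The final modulo guards against float rounding pushing the angle up to 2*pi.
    return int(angle / WEDGE_ANGLE) % WEDGE_COUNT


def provides_cover(wedge_distances: Sequence[float], point: Point2, threat: Point2) -> bool:
    """True if an obstruction in the threat's wedge is closer than the threat itself."""
    dx, dz = threat[0] - point[0], threat[1] - point[1]
    return wedge_distances[wedge_index(dx, dz)] < math.hypot(dx, dz)


# A low wall 1.5 units from the point, in the +X wedge only.
distances = [math.inf] * WEDGE_COUNT
distances[0] = 1.5
print(provides_cover(distances, (0.0, 0.0), (10.0, 0.0)))  # True: the wall is in between
print(provides_cover(distances, (0.0, 0.0), (1.0, 0.0)))   # False: threat is closer than the wall
print(provides_cover(distances, (0.0, 0.0), (0.0, 10.0)))  # False: the +Z wedge is open
```

Because each query is one `atan2`, one array read, and one comparison, checking cover for many candidate points stays cheap.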

Decision Making

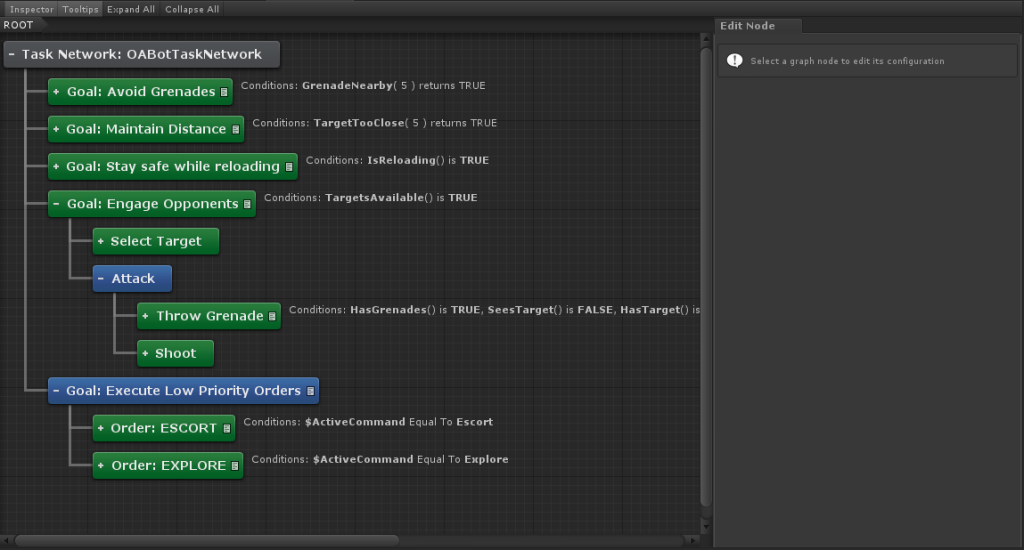

For decision making, we're using a technique called Hierarchical Task Networks, or HTNs for short. HTNs are a type of planner: the output of an HTN graph is a plan, a sequence of actions intended to achieve an end goal. A related technique is GOAP (Goal Oriented Action Planning), which uses A* to chain actions together until they reach an end goal. This results in highly emergent behavior (since the planner can combine any sequence of actions to produce the desired result), but it is also performance heavy. Additionally, plans in games like first-person shooters tend to be incredibly short, often just a handful of actions, so the power of GOAP can be overkill. HTNs, on the other hand, are very light on CPU. While not as automatic as GOAP, they provide much more control over how the AI behaves. They are a sort of cross between GOAP and Behavior Trees: like GOAP, they produce plans which attempt to satisfy end goals (and can analyse and predict world state to do so), but they look a lot like Behavior Trees.
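To make "produce a plan and predict world state" concrete, here is a minimal, textbook-style HTN decomposer. It is a generic sketch, not the API of the planner we use. Compound tasks list alternative methods in priority order, primitive tasks have a precondition and an effect, and each effect is applied to a copy of the world state so later steps are checked against the predicted state. The task names and the ammo model are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Union

State = Dict[str, int]


@dataclass
class Primitive:
    name: str
    precondition: Callable[[State], bool]
    effect: Callable[[State], None]  # mutates the state it is given


@dataclass
class Compound:
    name: str
    methods: List[List["Task"]]  # alternatives, tried in order


Task = Union[Primitive, Compound]


def decompose(tasks: List[Task], state: State) -> Optional[List[str]]:
    """Depth-first HTN decomposition; returns primitive action names or None."""
    if not tasks:
        return []
    head, rest = tasks[0], tasks[1:]
    if isinstance(head, Primitive):
        if not head.precondition(state):
            return None
        predicted = dict(state)  # never touch the real world state
        head.effect(predicted)   # predict the world after this action
        tail = decompose(rest, predicted)
        return None if tail is None else [head.name] + tail
    for method in head.methods:  # first method that fully decomposes wins
        plan = decompose(method + rest, state)
        if plan is not None:
            return plan
    return None


reload_weapon = Primitive("reload", lambda s: s["spare_ammo"] > 0,
                          lambda s: s.update(clip=6, spare_ammo=max(0, s["spare_ammo"] - 6)))
shoot = Primitive("shoot", lambda s: s["clip"] > 0,
                  lambda s: s.update(clip=s["clip"] - 1))
melee = Primitive("melee", lambda s: True, lambda s: None)
attack = Compound("attack", [[shoot], [reload_weapon, shoot], [melee]])

print(decompose([attack], {"clip": 0, "spare_ammo": 12}))  # ['reload', 'shoot']
print(decompose([attack], {"clip": 0, "spare_ammo": 0}))   # ['melee']
```

Because `reload` predicts a full clip, `shoot` passes its precondition in the second method even though the current clip is empty. That look-ahead is what separates this from a plain Behavior Tree.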

Fun fact: a friend of mine is working on the HTN system itself. You can sign up here if you want to participate in beta testing.

The bot AI in Orbital Assault is separated into three tiers. At the lowest level are the individual bots, which are organized into Squads, which are in turn managed and given orders by the Commander. Information flows roughly like this:

- Commander gives an order to a Squad.

- Squad interprets this order and translates it into orders for each individual member.

- Each member follows this order as part of its own decision-making logic.
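The information flow above can be sketched as plain hardcoded classes, which fits the note below that the Commander and Squad do not use an HTN. The order strings and the rule that the first member carries the flag are hypothetical; the bot's own HTN would read `Bot.order` as one more input to its decision making.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Bot:
    name: str
    order: Optional[str] = None  # read by the bot's own HTN as one more input


@dataclass
class Squad:
    members: List[Bot] = field(default_factory=list)

    def receive(self, order: str) -> None:
        """Translate a squad-level order into one order per member."""
        if order == "retrieve_flag" and self.members:
            carrier, *escorts = self.members  # hypothetical rule: first member carries
            carrier.order = "get_flag"
            for bot in escorts:
                bot.order = f"escort:{carrier.name}"
        else:
            for bot in self.members:  # fallback: pass the order straight down
                bot.order = order


@dataclass
class Commander:
    squads: List[Squad] = field(default_factory=list)

    def issue(self, squad_index: int, order: str) -> None:
        self.squads[squad_index].receive(order)


alpha = Squad([Bot("a"), Bot("b"), Bot("c")])
commander = Commander([alpha])
commander.issue(0, "retrieve_flag")
print([(bot.name, bot.order) for bot in alpha.members])
# [('a', 'get_flag'), ('b', 'escort:a'), ('c', 'escort:a')]
```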

So, for instance, in a CTF game the Commander might tell a Squad to retrieve the flag. That Squad might then tell one of its members to go get the flag, and tell its other members to escort that member. The Commander and Squad are both hardcoded (the logic behind them is extremely simple, so an HTN would be overkill). The individual bot AI, however, is implemented as an HTN, and as it stands the HTN looks like this:

I've structured the graph so that all of the top-level nodes are goal nodes, each intended to satisfy a goal. The children of these goal nodes then specify how to accomplish that goal. Each goal has a condition attached to it (except for the last one). The tree is analysed from top to bottom, and the HTN executes the first goal whose conditions are met (that is, the first goal it can satisfy). A goal's conditions also include those of its children: if a child of a goal requires the AI to have, say, grenades in its inventory, the HTN planner will not explore that goal when the AI has no grenades.

So goals at the top automatically have a higher priority than goals at the bottom. In fact, in this tree the AI will not execute any orders as long as it is preoccupied with engaging targets or staying alive (at the moment, only two orders have been implemented, both of them low-priority). In the future there will likely also be high-priority orders, to be executed as long as the AI isn't busy trying to stay alive (such as running away from a nearby grenade) but given precedence over engaging targets.
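Top-to-bottom goal selection, including a goal inheriting its children's conditions, reduces to a first-match scan over an ordered list. This sketch simplifies each goal to a single required sequence of children (so every child condition must hold) and uses made-up goal and state names; it is not the actual Orbital Assault tree.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

State = Dict[str, int]
Condition = Callable[[State], bool]


@dataclass
class Goal:
    name: str
    condition: Optional[Condition] = None  # None: always applicable
    child_conditions: List[Condition] = field(default_factory=list)

    def applicable(self, state: State) -> bool:
        # A goal is only explored if its own condition and every child's hold.
        own = self.condition is None or self.condition(state)
        return own and all(check(state) for check in self.child_conditions)


def select_goal(goals: List[Goal], state: State) -> Optional[str]:
    """Scan top to bottom; list order is priority order."""
    for goal in goals:
        if goal.applicable(state):
            return goal.name
    return None


GOALS = [
    Goal("flee_grenade", lambda s: s["grenade_nearby"]),
    Goal("grenade_target", lambda s: s["has_target"],
         child_conditions=[lambda s: s["grenades"] > 0]),
    Goal("engage_target", lambda s: s["has_target"]),
    Goal("follow_order", lambda s: s["has_order"]),  # low priority, as in the text
    Goal("wander"),                                  # last goal: no condition
]

state = {"grenade_nearby": False, "has_target": True, "grenades": 0, "has_order": True}
print(select_goal(GOALS, state))  # engage_target (no grenades, so grenade_target is skipped)
```

A high-priority order would simply be another `Goal` inserted between `flee_grenade` and `engage_target`.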

There's another part of decision making as well, and it has to do with the AI waypoints described earlier, which we use for a great many things:

- For wandering. The bot locates nearby waypoints and picks one roughly in front of it (with a randomization factor).

- For shooting. When shooting at the target, the bot picks a nearby waypoint which has line of sight to the target.

- For taking cover. The bot picks a nearby waypoint that provides cover against the target.

- For throwing grenades. The bot picks a waypoint to throw the grenade at that satisfies the following conditions:

  - Is close enough to the target to deal damage.

  - Has line of sight to the target.

  - Has line of sight to the throwing bot.

Conclusion

This has been an overview of the multiplayer bot AI as it currently stands in Orbital Assault. While by no means complete, I hope it shows you the kind of effort we're putting into Orbital Assault and, if you're a developer, gives you some inspiration of your own. If you ARE a developer, I also highly recommend that you take a look at the HTN planner by StagPoint Consulting, as they are currently looking for beta testers.

Happy holidays from us here at MoPho' Games.

- Alan Stagner