Introduction

I have come up with a “plausible” language system design and have been working on it. The first step is recognizing sentence types (normal sentence, question and order) using a Neural Network trained with Batch Gradient Descent.

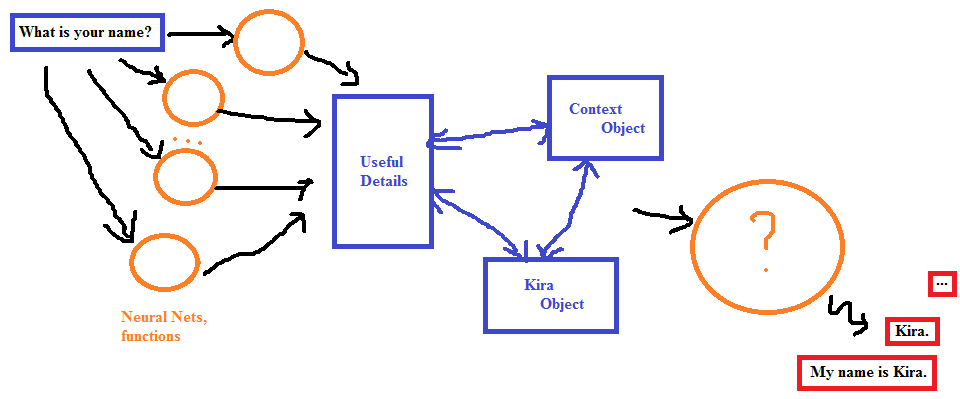

System Design

This system’s goal is to simulate smart chatbots. It takes a sentence as input and is expected to produce a reply as output. For example:

- Input: What band do you like?

- Output: I like Pantera

- Input: Any others?

- Output: I’ve been listening to Iron Maiden too, they’re solid.

The player will produce input by typing into a textbox and the kids will reply.

Since we want the kids to remember the context of the current conversation and their relationship with the speakers, and to insert their own opinions, feelings and thoughts into their interactions, a simple sentence-by-sentence matching system won’t work.

Instead, I will run the input sentence through a few different neural networks and functions to extract a bunch of useful details. These details will then be combined with the context object, the kids’ personality and state objects to construct a reply.

We will want to extract the following details:

- Sentence type (Normal sentence, question, order, etc)

- Question type (Ask for reason, fact, means/way, etc)

- Main subject

- Main object

- Main verb

- Main adjective

…

For example:

What is your name?

- Sentence type: question

- Question type: fact

- Main subject: listener

- Main verb: be

- Main object: name

He loves cake

- Sentence type: normal sentence/inform

- Main subject: he

- Main verb: love

- Main object: cake
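One way to hold these details is a small struct. This is only a minimal sketch: the names `SentenceType`, `QuestionType`, `SentenceDetails` and `exampleWhatIsYourName` are hypothetical and chosen for illustration, not taken from the actual implementation.

```cpp
#include <string>

// Hypothetical types for illustration; all names here are assumptions.
enum class SentenceType { Normal, Question, Order };
enum class QuestionType { None, Reason, Fact, Means };

struct SentenceDetails {
    SentenceType sentenceType = SentenceType::Normal;
    QuestionType questionType = QuestionType::None; // only meaningful for questions
    std::string mainSubject;   // e.g. "listener", "he"
    std::string mainVerb;      // base form, e.g. "be", "love"
    std::string mainObject;    // e.g. "name", "cake"
    std::string mainAdjective; // left empty when the sentence has none
};

// Fills in the details for "What is your name?" by hand,
// just to show the shape of the data the extraction step should produce.
SentenceDetails exampleWhatIsYourName() {
    SentenceDetails d;
    d.sentenceType = SentenceType::Question;
    d.questionType = QuestionType::Fact;
    d.mainSubject = "listener";
    d.mainVerb = "be";
    d.mainObject = "name";
    return d;
}
```

Keeping everything in one object should make it easier to combine the result with the context, personality and state objects later on.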

Recognize sentence type with Neural Network

In this section I will walk through the steps I took to build a neural network that recognizes the sentence type of the input.

Since many things are still unclear to me, any suggestions, corrections and clarifications are much appreciated.

I also believe this article is a good start if you’re familiar with Neural Networks and interested in smart chatbots in games. Feel free to follow along!

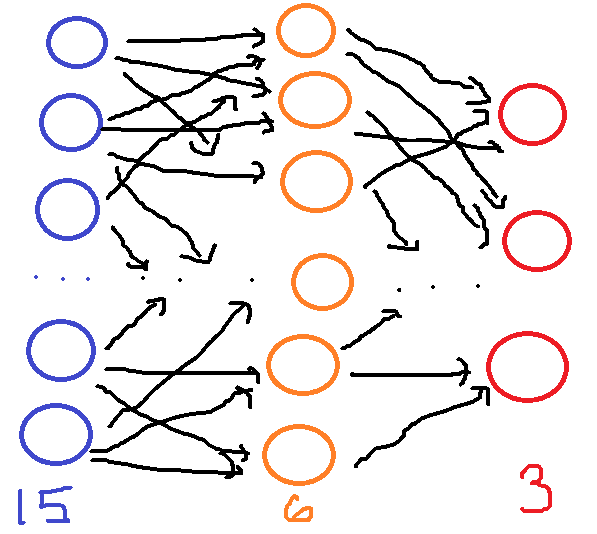

Step 1: Define the Neural Network

I split the input sentence into words, instead of characters or chunks of data, so I can control the vocabulary of the kids. The “?” and “!” marks are each counted as a single word. The maximum number of words an input can hold is 15. That should be enough to cover most “meaningful” sentences in our game context, so we will set the size of the input layer to 15.
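The splitting step could look roughly like the sketch below. The function name `tokenize` and the constant `kMaxWords` are hypothetical. Lowercasing the words, dropping punctuation other than “?” and “!”, and ignoring anything past the 15-word limit are my assumptions, not something the original code is known to do.

```cpp
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Limit taken from the text: an input holds at most 15 words.
const std::size_t kMaxWords = 15;

// Splits a sentence into lowercase words; "?" and "!" become tokens of their own.
// Assumptions: other punctuation is dropped, tokens past kMaxWords are ignored.
std::vector<std::string> tokenize(const std::string& sentence) {
    std::vector<std::string> tokens;
    std::string current;
    // Pushes the word collected so far (if any) and starts a new one.
    auto flush = [&]() {
        if (!current.empty() && tokens.size() < kMaxWords) tokens.push_back(current);
        current.clear();
    };
    for (unsigned char c : sentence) {
        if (std::isalnum(c) || c == '\'') {
            current += static_cast<char>(std::tolower(c));
        } else {
            flush();
            if ((c == '?' || c == '!') && tokens.size() < kMaxWords) {
                tokens.push_back(std::string(1, static_cast<char>(c)));
            }
        }
    }
    flush();
    return tokens;
}
```

With this sketch, “What is your name?” turns into the five tokens what, is, your, name and ?.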

Observation: If our data set is large enough, reducing the size of the input layer to around 10 won’t improve our algorithm’s performance much!

The output layer will have 3 neurons, used to classify Normal sentence, Question and Order. I use the sigmoid function as the activation and 6 hidden neurons in a single hidden layer.

The Neural Net model without bias neurons
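A forward pass through a net of this shape (15 inputs, 6 hidden neurons, 3 outputs, sigmoid activation, no bias) can be sketched as follows. The names `Matrix`, `sigmoid`, `layerForward` and `forward` are hypothetical, and the weight layout `weights[out][in]` is an assumption made for the sketch.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Assumed layout: weights[j][i] connects input i to neuron j.
using Matrix = std::vector<std::vector<double>>;

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// One fully connected layer without bias:
// out[j] = sigmoid(sum over i of w[j][i] * in[i]).
std::vector<double> layerForward(const Matrix& w, const std::vector<double>& in) {
    std::vector<double> out(w.size(), 0.0);
    for (std::size_t j = 0; j < w.size(); ++j) {
        double sum = 0.0;
        for (std::size_t i = 0; i < in.size(); ++i) sum += w[j][i] * in[i];
        out[j] = sigmoid(sum);
    }
    return out;
}

// Input (15 values) -> hidden layer (6 neurons) -> output layer (3 neurons).
std::vector<double> forward(const Matrix& hiddenW, const Matrix& outputW,
                            const std::vector<double>& input) {
    return layerForward(outputW, layerForward(hiddenW, input));
}
```

The three output values can then be read as scores for Normal sentence, Question and Order.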

I started with a Neural Net using Stochastic Gradient Descent (SGD) but eventually switched to Batch Gradient Descent. The main reason is that my Neural Net model in C++ didn’t support batch learning, so I wanted to implement that. I also find SGD a little unstable: I haven’t generated much data, so if I unluckily end up with a bad shuffle before running SGD, the performance is really poor. Also, since I don’t understand SGD’s momentum well enough, I had to spend too much time tweaking it and started to lose control over the performance. So even though SGD fits Kikai well (online learning), I will stick with batch learning for now, both to reach decent stability and to gain more experience (this is my first time running batch learning in C++).
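To make the difference concrete, here is a minimal sketch of one batch update. It uses a single sigmoid unit instead of the full network and the cross-entropy gradient, both of which are simplifying assumptions. The names `Sample`, `sigmoidUnit`, `predict` and `batchStep` are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Sample {
    std::vector<double> x; // input features
    double y;              // target, 0 or 1
};

double sigmoidUnit(double z) { return 1.0 / (1.0 + std::exp(-z)); }

// Output of a single sigmoid unit without bias.
double predict(const std::vector<double>& w, const std::vector<double>& x) {
    double z = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) z += w[i] * x[i];
    return sigmoidUnit(z);
}

// One batch gradient descent step: accumulate the gradient over ALL samples,
// average it, then update the weights once.
// SGD would instead update the weights after every single sample.
void batchStep(std::vector<double>& w, const std::vector<Sample>& data, double learningRate) {
    if (data.empty()) return;
    std::vector<double> grad(w.size(), 0.0);
    for (const Sample& s : data) {
        // Cross-entropy gradient for a sigmoid unit: (prediction - target) * input.
        double error = predict(w, s.x) - s.y;
        for (std::size_t i = 0; i < w.size(); ++i) grad[i] += error * s.x[i];
    }
    for (std::size_t i = 0; i < w.size(); ++i) {
        w[i] -= learningRate * grad[i] / static_cast<double>(data.size());
    }
}
```

Because every update sees the whole data set, the order of the samples no longer matters, which is the stability I was after.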

Step 2: Generate and process Data

We can easily build a list of common nouns, verbs and adjectives, then write a program to generate normal sentences, questions and orders from these words. My current data set has 500 sentences, which I mostly wrote by hand and by copy-pasting patterns. It’s easier to manage for now.

normal.txt

Because I am tired

Clean food is good

Dinner is ready

Food need to be clean

Goodbye

He is bored

...

questions.txt

Are you alright?

Are you fine?

Are you happy now?

Are you hiding something?

Are you hungry?

Are you okay?

...

order.txt

Admit it

Answer me

Be careful

Be quiet

Be yourself

Beat it!

...

You can get the full data set here.

I then read the sentences from the files, extract the words from them, and store the words in a std::map like this:

std::map<std::string, int> words;

...

if (words.find(newWord) == words.end()) {

    words.insert(std::make_pair(newWord, static_cast<int>(words.size())));

}

So now each word is labeled with a different number. We can then transform the input sentence into a std::vector of these numbers to feed our Neural Net. I thought of 2 different ways to order the words:

Either place the words right at the head: [ what, is, your, name, -1, -1, -1, … ]

or in the middle: [ -1, -1, -1, -1, what, is, your, name, -1, -1, … ]

I quickly tested both versions and their performance is pretty close if our data is large enough. Placing the words in the middle seems quite interesting, but the first way feels more stable to me.
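Both layouts can be produced by one function. This is only a sketch: the name `encode` and its parameters are hypothetical, encoding unknown words as -1 is an assumption, and the exact offset used for the “middle” layout is not specified above, so this version simply centers the words.

```cpp
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Encodes tokens as word ids in a fixed-size vector, padding with -1.
// Unknown words are also left as -1 (an assumption).
// centered == false: words start at index 0 (head layout).
// centered == true:  words are placed in the middle of the vector.
std::vector<double> encode(const std::vector<std::string>& tokens,
                           const std::map<std::string, int>& words,
                           std::size_t inputSize, bool centered) {
    std::vector<double> res(inputSize, -1.0);
    std::size_t count = tokens.size() < inputSize ? tokens.size() : inputSize;
    std::size_t offset = centered ? (inputSize - count) / 2 : 0;
    for (std::size_t i = 0; i < count; ++i) {
        auto it = words.find(tokens[i]);
        if (it != words.end()) res[offset + i] = it->second;
    }
    return res;
}
```

Switching the `centered` flag is then enough to compare the two layouts on the same data.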

I then perform a simple feature scaling. I don’t know the best way to do it, or why skipping it hurts the algorithm so much. For now, I’m simply dividing each number in the vector by the number of known words:

res[i] /= words.size();

With that, the training data is set up. A training case looks like this:

- input: { 0.317073, 0.198171, 0.131098, 0.344512, 0.0121951, 0.0152439, 0, 0, 0, 0, 0, 0, 0, 0, 0 } // What is your name?

- output: { 0, 1, 0 } // Question
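Putting the scaling and the expected output together, a training case could be built like this. The names `TrainingCase` and `makeTrainingCase` are hypothetical. The class order (0 = normal, 1 = question, 2 = order) follows the output example above. How padding values should be treated by the scaling is not stated, so this sketch just scales whatever it is given.

```cpp
#include <cstddef>
#include <vector>

struct TrainingCase {
    std::vector<double> input;  // scaled word ids
    std::vector<double> output; // one-hot: { normal, question, order }
};

// Divides every id by the vocabulary size (the simple scaling described above)
// and builds a one-hot target vector.
// classIndex: 0 = normal sentence, 1 = question, 2 = order.
TrainingCase makeTrainingCase(std::vector<double> ids, std::size_t vocabularySize,
                              std::size_t classIndex) {
    for (double& v : ids) v /= static_cast<double>(vocabularySize);
    TrainingCase tc;
    tc.input = ids;
    tc.output.assign(3, 0.0);
    tc.output.at(classIndex) = 1.0; // throws std::out_of_range for an invalid class
    return tc;
}
```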

We can also write a simple function to auto-correct unknown words, like “wat” -> “what”, and to reduce words to their base form, turning plural nouns into singular ones and third-person verbs into their base form (“dogs” -> “dog”, “eats” -> “eat”), etc. More on this in upcoming articles.

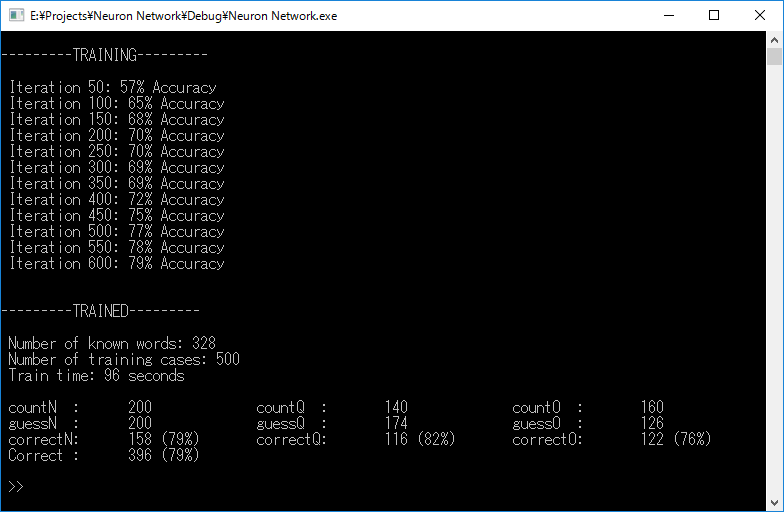

Step 3: Training

With the data ready, I train the Neural Net with Batch Gradient Descent. Since I have very poor data, only 500 sentences for 328 different words, I have to run Gradient Descent with a small learning rate (0.025) for 600 iterations! The result is below, and it seems to suffer from both underfitting and overfitting (What???). I couldn’t even reach 80% accuracy on the training set, so I need better data!
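For reference, accuracy here can be measured by comparing the strongest output neuron with the expected class. This is a sketch of one common way to do it, not necessarily how my numbers were produced; the names `argmax` and `accuracy` are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <iterator>
#include <vector>

// Index of the largest value, i.e. the predicted (or expected) class.
std::size_t argmax(const std::vector<double>& v) {
    return static_cast<std::size_t>(
        std::distance(v.begin(), std::max_element(v.begin(), v.end())));
}

// Fraction of cases whose predicted class matches the expected class.
// predictions[i] is the net's output for case i, targets[i] its one-hot label.
double accuracy(const std::vector<std::vector<double>>& predictions,
                const std::vector<std::vector<double>>& targets) {
    if (predictions.empty()) return 0.0;
    std::size_t correct = 0;
    for (std::size_t i = 0; i < predictions.size(); ++i) {
        if (argmax(predictions[i]) == argmax(targets[i])) ++correct;
    }
    return static_cast<double>(correct) / static_cast<double>(predictions.size());
}
```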

I’ve also recorded a video inspecting the trained neural net. Check it out!

So I’ve been able to create a neural net that works, but not well. It can’t fit the training set well enough, reaching only 77%~79% accuracy on different runs. I will start by working on the data, then tackle the smaller problems that I’m still unsure of, like trying different hidden layer counts and sizes, tweaking the learning rate, etc.

Again, I will be very happy if we can form a group working on smart chatbots, not specifically for Kikai, but for games in general. Any comments, corrections, suggestions and questions are much appreciated.

Anyway, of the 5 problems that I mentioned in the first article, this article only touches a small part of the first one. Many more discussions are coming soon, stay tuned!

References

Online courses for Machine learning:

- Machine Learning, taught by Andrew Ng

- Neural Networks for Machine Learning, taught by Geoffrey Hinton

Implementing Neural Networks in C++