Hi guys,

Glad to know you're reading today's monthly report and even happier to bring you the following news: patch, estimated release date, fresh hard sci-fi lore.

PATCH #051716 RELEASED

FIXES:

Mechanics:

• The inventory listing bug that occurred when moving an object from the active slot to the inventory is fixed.

• Bug that prevented dropping an object on the floor fixed.

• Stacking system amended.

• Item identification bugs fixed.

• Tutorial pages now can be slid correctly.

• Item activation while switching items fixed.

• Saving/loading data while travelling between levels reworked.

FEATURES & CHANGES:

Gameplay:

• Ivy monodialog [first aid] added.

• Ivy monodialog [recover] added.

• Ivy dialog [warning] added.

• Quanton monodialog [NBC] added.

• Quanton monodialog [firealarm] added.

• Quanton dialog [broken] added.

• Free talk dialog with Ivy as a companion added.

• Tutorial "Character Skills" added.

• Tutorial "Hacking Electronic Devices" added.

• Tutorial "Lockpicking Mechanical Locks" added.

• Tutorial "Item Identification" added.

• Tutorial "Identifying Creatures" added.

• Tutorial "Journal Records" added.

• "What Makes a Terrorist?" Journal Record added to gameplay.

• "Gigantic Red Zones Amoebas" Journal Record added to gameplay.

• "R/K Selection Theory When Applied to the Status of 'Survivors'" Journal Record added to gameplay.

• "Quanton Hubs" Journal Record added to gameplay.

• "O'Shea's Cooperation Speech" Journal Record added to gameplay.

• "Artificial Intelligence" Journal Record added to gameplay.

Graphics:

• Screen resolution selection launch screen for the Mac version added.

• New Quanton NPC model (broken and fixed) and its VFX added.

Interface:

• Augmentation panel added to Character Menu.

• Other interactions are now locked while the in-game Main Menu is open.

Mechanics:

• The quantity dialog window no longer appears when moving only one object.

• Pop-up text mechanics (Fallout 1/2/T style) for monodialogues and NPC taunts added.

Other:

• Dozens of new items.

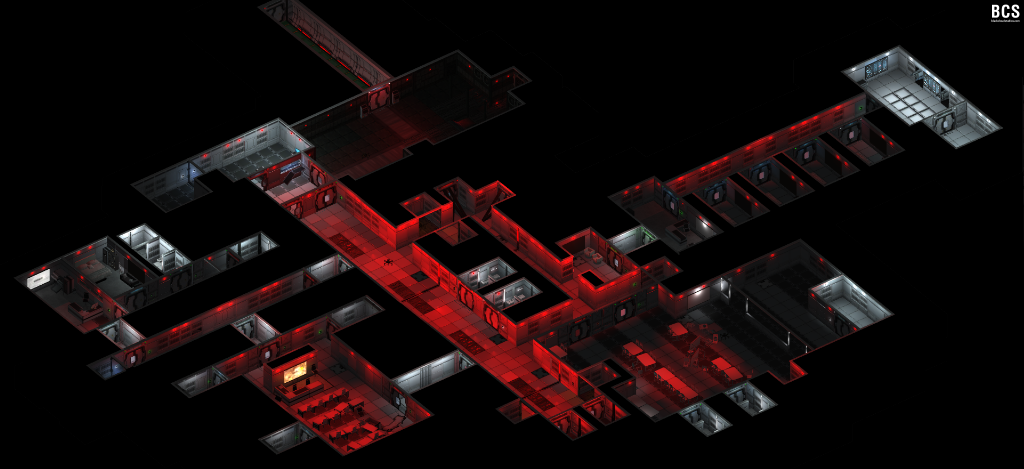

• Trash containers authentically added to Special Facility and Science Facility levels.

• Final containers (contents/types/states/descriptions/sounds) for Special Facility level added.

• Final containers (contents/types/states/descriptions/sounds) for Science Facility level added.

IMPORTANT NOTES:

1. Sorry for the delay with the update: it was ready around a week ago, but we found a critical bug in the final build. Kaspersky deleted (!) the whole build, citing "suspicious behaviors" when the character travels between levels. We were not able to find the origin of the bug in our scripts for a week; hence, we had to rewrite the whole save/load system from scratch, symbol by symbol. Now it works smoothly.

2. I know, with this huge update, it might look like we "finally" got to development just a couple of months ago. Frankly, we joke a lot about that within the team ourselves. But the fact is that the major work was done over the year before that: the work of creating content and an engine/constructor fitted to our SACPIC game mechanics. We are going step by step, just like Bioware did for Neverwinter Nights 1: it took around 3 years, 75 programmers and 45 testers (10 internal, 35 external), as well as 10 million dollars, just to create an engine/constructor fitted to DnD 3.5 mechanics, and only after that did they get to building a campaign on it. That was the case back in good old 2002. Modern technologies, social support and crowdfunding gave us a boost, but we still follow the same path and still face the same issues :)

3. Mac users, you can now select the screen resolution when launching the game, as well as run it in windowed mode. It looks beautiful, but I recommend using a resolution no wider than 1920 for now, because current Macs might lag when running the game at 4K resolution. E.g., I prefer to play it on a Mac at 1200 resolution, windowed. I know Macs suffer from some visual bugs in the Main Menu, but we’ll fix that in further updates.

4. You might encounter many dialogues with Ivy in this build, although she is still represented by a painted avatar. We got her model, but I decided it is not as cool as the rest of our content. She’s too important (as the companion for a decent part of the game) to be neglected. A freelancer did her model, so now our lead 3D modeler is redoing it himself. I’ll present her "old" version in comparison with the "new" one in the next update.

PROLOGUE RELEASE ESTIMATED DATE

One of the most inspiring parts of today's news is that, based on our current stage and pace of development, as well as on our current human and financial resources, I reckon we will complete and deliver the prologue by the end of this summer. I know this is just an estimate, but it is a realistic one.

The game core becomes more and more sophisticated with every monthly update. Very often, fixing one bug reveals a couple of new ones. The major threat to this release plan is the human factor (frankly, as has been the case during the whole development). I'm doing my best to attach a new spare programmer to the development (at least for bug fixing), but if we lose our current programmers, that might slow us down again. I'm praying with you that this won't happen and that we'll get through the coming three months smoothly and release a great product.

Beyond that, if it goes according to plan, we need to look to the future. In the next update, I'd like to share the plans with Co-Founders, coordinate with them, then (in August) share the updated plan with Kickstarter backers to ask for their thoughts on it. If that goes well too, we'll share the plan with everybody else after the release of the prologue this Fall.

8,000 WISHLIST FANS REACHED ON STEAM

In the previous update I posted Steam statistics about 7,000 wish lists collected and how that milestone had inspired us. It looks like my news found some traction in the hearts of our fans who follow the development process. This month the number of wish lists has increased to 8,200!

Thanks for adding us, guys. This growing number of wish list adds will definitely help us by the release date.

The art above was painted and kindly shared as an illustration by one of our favorite contributor-artists, Antony Fedotov, who worked on the cover art of Fall of Gyes. It is inspired by New Reno, the capital of the New Confederacy in the After Reset setting :)

ARTIFICIAL INTELLIGENCE LORE

At the current stage we’re working hard on the Ivy and Quanton characters. Both of these NPCs are virtual intelligences. For several weeks we have received a lot of questions from our fan, littleONE, about Artificial & Virtual Intelligence science and its place in the hard sci-fi setting of After Reset.

I decided to prepare some extra lore on that subject and include it in the prologue. You can read about it here or discover it in the current build yourself. But I’d like to show you how the hard sci-fi approach differs from the casual "fast-food" approach (e.g. for many, the subject of AI typically boils down to "AI is a threat" or "Kill all humans").

Here you can read part of an article by a public official from the Human Rights Watch. By 132 A.R. the HRW is one of the Social Corps Departments that monitor and enforce compliance with the ban on AI research and development within the United Governments. I hope you'll enjoy it:

"Specify the way in which you believe that a man is superior to a computer and I shall build a computer which refutes your belief. Turing’s challenge should not be taken up; for any sufficiently precise specification could be used in principle to program a computer."

Karl Popper, The Self and Its Brain

When thinking about Artificial Intelligence and artificial consciousness, our forebears often began from the premise that there are only two types of data processing: artificial and natural. But we can now be certain that this premise was mistaken.

The conceptual difference between artificial and natural systems was never definitive or exhaustive. Long before the dawn of modern synthetic biology, long before the Reset, we already had intelligent and conscious systems that could not be said to belong to either of these two categories. As for the other outmoded division, between hardware and software: once again, in the Past Age, we had biological systems that could be controlled by artificial (anthropogenic) software, while at the same time there also existed artificial devices controlled by programs that arose all on their own (via evolution).

But, having touched on issues of integrated biological neuron networks and non-biological machines as a type of "accelerated evolution," we are already reaching the problem of the Ethics of Consciousness. Here it is worth noting that we still do not have a functional theory to explain consciousness and, even if we did, there would be no guarantee that it would apply equally to Artificial Intelligence in a non-biological carrier.

Before research in the field of Artificial Intelligence was made illegal, and Evolutionary Robotechnology studies were limited, more than a century before the Reset, we had already begun to create the first self-modeling and self-cognizing robots. The most obvious example is the "starfish" made by Josh Bongard, Victor Zykov, and Hod Lipson. Over the course of their existence, these robots gradually developed an internal model of themselves, a model of the surrounding world, and a model of their place in it. These four-legged robots made use of these models to perform cognitive functions and interact with the world around them. When some legs were removed from one of these "starfish," it began to adapt its model to the new conditions, working out new approaches. In concrete terms, they learned to limp. Unlike human patients with Phantom Limb Syndrome, these robots were able to restructure their concept of their own body after losing limbs. These "starfish" were not only able to synthesize a personal model of the Self, but also to use it to give rise to intelligent behavior. Of course, these first models of the Self were unconscious, but as many readers remember, they were successfully used to help make the first computer models of human evolution.

The breakthrough in Artificial Intelligence research that followed was also correlated with breakthroughs in human neuropsychology. Before that, neuropsychology defined three things as the basis of consciousness as we know it: an integrated model of the surrounding world with feedback, which can be accessed by all parts of the mind; living through this model in the "here and now"; and the fact that the artificiality of this model of the world and of the "here and now" is hidden from the conscious mind. These very factors apply to humans as well: we have a virtual model of the world (though it may be inaccurate, limited, and contain delays and distortions), we have a virtual model of ourselves as organisms (remember Phantom Limb Syndrome above), we can "feel" that we live in the "present" moment where we are and, from the beginning of our lives (and for some, for their whole lives), we do not recognize the artificial nature of these structures.

However, what our forebears, the creators of the "starfish," did not consider was the presence of a fourth, connecting factor that allows crossing the border from Virtual Intelligence to Artificial Intelligence: the simultaneous detachment and backward integration of a model of the Self into an already-formed virtual picture of the world. With it, the system begins to recognize/cognize itself. It becomes the Ego and a naive realist regarding everything its model of the Self claims. Such an Artificial Intelligence would believe (!) in itself.

THE ETHICS OF CONSCIOUSNESS AND ARTIFICIAL INTELLIGENCE

One of the first questions I get from schoolchildren at the beginning of my Conceptual Robotechnics course is, "If we can make Artificial Intelligence, why don’t we? And why does the government forbid all research in this field?"

I am reminded of our conclusions in the previous chapter: backward integration of a model of the Self in virtual form into the world "here and now" allows these systems to see themselves in this world, in this place and time. The system begins to recognize/cognize itself. And that change turns this artificial system into an object of moral consideration: it now becomes potentially capable of suffering. Pain, fear, negative emotions and other internal conditions that reflect part of reality as "undesirable" can serve as reasons for suffering only if they are consciously realized. A system that is not revealed to itself (for example, our modern Virtual Intelligence systems) cannot suffer, in that it is deprived of a sense of ownership. These systems are like rooms in which a light is turned on, but no one is home. They cannot be objects of ethical consideration a priori. If such a system has a conscious model of the world (even if it is more accurate and complete than a human’s), but no model of the Self, then we can pull the plug at any moment with our minds at ease.

But Artificial Intelligence is capable of suffering, because it integrates pain signals, states of emotional distress or negative thoughts into its transparent model of the Self, and they are presented not as an abstraction, but as someone’s (!) pain or negative feelings.

Here let us conduct a thought experiment that brilliantly illustrates the potential moral issues of experimenting with Artificial Intelligence in this way. Imagine that you are a member of an SOC ethics committee considering whether to award a science grant to a medical research project.

The author of the project says: "We want to use genetic technology to breed mentally disabled children. For the study, we need children with certain intellectual defects, emotional insufficiencies and perception deficits. This is an important innovative research strategy, which requires controlled and reproducible research on the mental development of mentally disabled children after birth. This is important not only to further our understanding of how our own brain works, but also has a huge potential for curing several mental illnesses. That is why we need the funding immediately. Do the right thing!"

Beyond a shadow of a doubt, you find this idea not only absurd, but also dangerous. One could confidently suppose that such a proposal would not be approved by any ethics committee in this world.

Yet the first generation of Artificial Intelligence, or artificial consciousness, is unlikely to find defenders in ethics committees like the one described above. The first generation of machines that satisfy the minimum sufficient set of conditions for living in the present and for self-consciousness would find themselves in a situation similar to that of the genetically engineered mentally handicapped human children. And just like those babies, the first Artificial Intelligences will unavoidably have all types of functional, cognitive and presentational dysfunctions, even if these are caused only by errors in the experimental design. It is entirely possible that their perception systems (artificial eyes, ears, etc.) won’t work very well at first. They will be half-deaf, half-blind and will have to struggle with difficulties in perceiving the world and their own place in it. At the same time, having self-consciousness, they will be capable of suffering.

If their model of the Self is rooted in low-level self-regulation mechanisms in their hardware (just like our own emotional model of the Self is rooted in the upper brainstem and hypothalamus), then they will consciously experience all the pain and emotions. It will be painful for them to go through losing homeostatic control, because they have a built-in perception of their own existence. They would have personal interests and, as such, would subjectively experience that fact. They would be able to suffer emotionally in a way that is qualitatively different from ours in form and intensity, one that we, their creators, would be powerless to even imagine.

All the same, the fact is that the first generation of such Artificial Intelligences will probably have a great many negative emotions, brought about by self-regulation failures due to hardware and software defects. These negative emotions will be recognized and intensely felt by the Artificial Intelligences themselves but, most likely, we will not be able to understand or even discern that they are present.

Let’s try to continue our thought experiment… Imagine that the next generation of Artificial Intelligences, which would be more developed, begins to attach more complex cognitive characteristics to its model of the Self. In this case, they will not only be able to conceptually understand the strangeness of their existence as mere objects of scientific interest, but will also be able to suffer intellectually from recognizing their own "inferiority." Further generations of such Artificial Intelligences will be able to fully recognize themselves as second-rate, though also intelligent, beings used as disposable experimental subjects. Just think what it must be like to "wake up" as a developed artificial being which, despite having a firm sense of identity and a personality that experiences itself, is nothing more than a mass-produced product.

This story of Artificial Intelligence, of a being artificially endowed with self-consciousness, but without human or civil rights, or any kind of societal lobby, is an excellent demonstration of how connected the ability to suffer is with consciousness itself. It also is a principled argument for the unacceptability of creating Artificial Intelligence for the purpose of academic research. We should not increase the total amount of suffering in the universe without necessity. And creating Artificial Intelligence systems would be doing just that from the very outset...

Roger Simmons,

Social Corps Department of Human Rights Watch.

* * *

That is all for today. Getting back to the development.

Thanks, guys, for rooting for us and for your outstanding patience!

See you in a month, or daily on our forum and Steam.

Add us on Steam Early Access.

Visit After Reset RPG official Store.

Join After Reset RPG official website.